11 Jul 2024 : Day 285

This morning I'm continuing my analysis of how the browser responds to the getUserMedia:request notification. I've been taking a second look at the three methods we were examining yesterday. I'm going to be referring back to them today, so it may be worth having them to hand if you want to follow along.

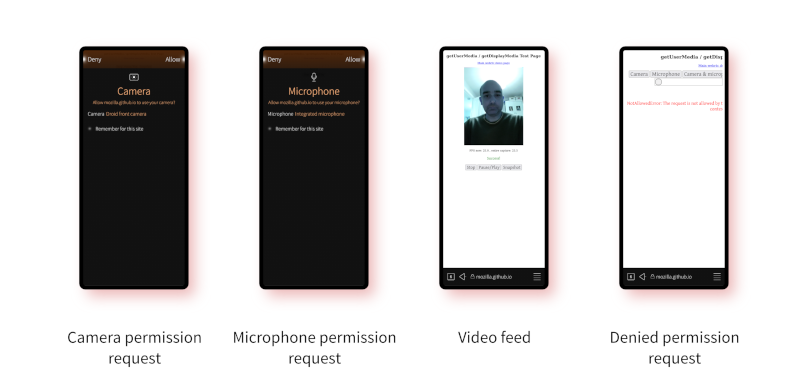


There are a few things that I want to note about them. First is that they're all triggered in the same way, namely in response to the getUserMedia:request notification. This notification is sent from the C++ code in MediaManager.cpp and picked up by the observer in WebRTCChild.jsm in the upstream code and in EmbedLiteWebrtcUI.js on the Sailfish browser.

On the Sailfish OS side we can see the case that filters for this message in the snippet I posted yesterday. But I skipped that part on the upstream snippets. I think it's worth taking a look at it though, just to tie everything together:

```javascript
// This observer is called from BrowserProcessChild to avoid
// loading this .jsm when WebRTC is not in use.
static observe(aSubject, aTopic, aData) {
  switch (aTopic) {
    case "getUserMedia:request":
      handleGUMRequest(aSubject, aTopic, aData);
      break;
    [...]
  }
}
```

There's nothing magical going on here. The notification is fired, it's picked up by the observer and one of the handleGUMRequest() methods that I shared yesterday is executed depending on whether we're running ESR 78 or ESR 91.

On Sailfish OS a similar thing happens but with a different observer in a different source file. The main difference is that there's no handleGUMRequest() method that gets called. Instead the code is executed inline inside the switch statement.
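To make the contrast concrete, here's a minimal sketch of the two dispatch styles. Everything in it is a hypothetical stand-in: the observer objects, the calls array and the simplified subject are assumptions for illustration and aren't taken from either codebase.

```javascript
// Hypothetical stand-ins: neither observer below is the real Gecko or
// Sailfish OS code, they only mirror the shape of the dispatch.
const calls = [];

// Upstream style: the case delegates to a separate handler function.
function handleGUMRequest(aSubject, aTopic, aData) {
  calls.push(`upstream handled call ${aSubject.callID}`);
}

const upstreamObserver = {
  observe(aSubject, aTopic, aData) {
    switch (aTopic) {
      case "getUserMedia:request":
        handleGUMRequest(aSubject, aTopic, aData);
        break;
    }
  },
};

// Sailfish OS style: the work happens inline inside the case.
const sailfishObserver = {
  observe(aSubject, aTopic, aData) {
    switch (aTopic) {
      case "getUserMedia:request":
        calls.push(`sailfish handled call ${aSubject.callID}`);
        break;
    }
  },
};

// Simulate the notification being fired at both observers, along with
// an unrelated topic that neither of them should react to.
const subject = { callID: "42" };
for (const observer of [upstreamObserver, sailfishObserver]) {
  observer.observe(subject, "getUserMedia:request", null);
  observer.observe(subject, "some:other-topic", null);
}
console.log(calls);
```

Both observers react only to the getUserMedia:request topic. The only structural difference is whether the body lives in a named function or directly in the case.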

This is one of two main differences between the two. The other main difference is due to what happens inside that code. The upstream code opens a prompt like this on ESR 78:

```javascript
prompt(
  contentWindow,
  aSubject.windowID,
  aSubject.callID,
  constraints,
  devices,
  secure,
  isHandlingUserInput
);
```

This aligns with some equivalent but different code used to open a prompt on Sailfish OS:

```javascript
EmbedLiteWebrtcUI.prototype._prompt(
  contentWindow,
  aSubject.callID,
  constraints,
  devices,
  aSubject.isSecure);
```

On upstream ESR 91 we also have some code that does something similar. There are differences here as well though, most notably the parameters that are passed in and where they come from.

```javascript
prompt(
  aSubject.type,
  contentWindow,
  aSubject.windowID,
  aSubject.callID,
  constraints,
  aSubject.devices,
  aSubject.isSecure,
  aSubject.isHandlingUserInput
);
```

The part of this we're particularly interested in is where the parameters are coming from, and we're especially concerned with the parameters that are needed for the _prompt() call on Sailfish OS.

Most of the parameters needed by our Sailfish OS code have stayed the same between ESR 78 and ESR 91 in the upstream code. That covers contentWindow, aSubject.callID, constraints and aSubject.isSecure.

That leaves just the devices parameter that has changed and that we need to worry about. Let's examine a bit more closely what's going on with this. In both ESR 78 and the Sailfish OS code we essentially had the following, where I've simplified things to remove all of the obfuscatory cruft.

```javascript
contentWindow.navigator.mozGetUserMediaDevices(
  function(devices) {
    prompt(devices);
  },
  function(error) {[...]},
);
```

Although we don't have a Promise object, this is nevertheless essentially a promise on devices. The call to mozGetUserMediaDevices() will eventually either resolve to call the prompt() method with the fulfilled value for devices or it will call the error method.

In ESR 91 the promise is no longer needed: it's presumably been refactored higher up the call chain so that the devices value has already been resolved before the getUserMedia:request notification is sent out. Rather than us having to request the value asynchronously, in ESR 91 it's passed directly as part of the notification. That's why we have devices in ESR 78 and aSubject.devices in ESR 91.
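The "essentially a promise" point can be illustrated with a small sketch. Note that fakeGetUserMediaDevices(), devicesPromise() and devicesFromSubject() are all hypothetical names invented for this example. The fake calls back synchronously to keep things short, whereas the real mozGetUserMediaDevices() is asynchronous and returns actual device objects rather than strings.

```javascript
// Hypothetical stand-in for mozGetUserMediaDevices(): it reports its result
// through a success callback or an error callback, never a return value.
// Unlike the real call, it invokes the callback synchronously.
function fakeGetUserMediaDevices(onSuccess, onError, shouldFail = false) {
  if (shouldFail) {
    onError(new Error("request failed"));
  } else {
    onSuccess(["camera", "microphone"]);
  }
}

// ESR 78 shape: wrapping the callback pair makes the implicit promise
// explicit. Success fulfils the promise, the error callback rejects it.
function devicesPromise(shouldFail = false) {
  return new Promise((resolve, reject) => {
    fakeGetUserMediaDevices(resolve, reject, shouldFail);
  });
}

// ESR 91 shape: the devices have already been resolved and simply
// arrive as a property of the notification subject.
function devicesFromSubject(aSubject) {
  return aSubject.devices;
}

// The ESR 78 style has to wait for the value...
devicesPromise().then((devices) => console.log("ESR 78 style:", devices));

// ...whereas the ESR 91 style can read it straight away.
console.log("ESR 91 style:", devicesFromSubject({ devices: ["camera"] }));
```

In the first case the caller has to wait for the value, in the second it can be read immediately, which is the change that lets the callback wrapper be dropped.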

This is true for ESR 91 upstream, but that means it's also likely to be true for our Sailfish OS version that's downstream of ESR 91. We should therefore, in theory, be able to do something like this:

```javascript
case "getUserMedia:request":
  // Now that a getUserMedia request has been created, we should check
  // to see if we're supposed to have any devices muted. This needs
  // to occur after the getUserMedia request is made, since the global
  // mute state is associated with the GetUserMediaWindowListener, which
  // is only created after a getUserMedia request.
  GlobalMuteListener.init();

  let constraints = aSubject.getConstraints();
  let contentWindow = Services.wm.getOuterWindowWithId(aSubject.windowID);

  EmbedLiteWebrtcUI.prototype._prompt(
    contentWindow,
    aSubject.callID,
    constraints,
    aSubject.devices,
    aSubject.isSecure);
  break;
```

The good news about this is that the EmbedLiteWebrtcUI.js file is contained in the embedlite-components project, built separately from the gecko packages. This project is incredibly swift to compile, so I can test these changes almost immediately.

When I do I still get an error, but it's different this time:

```
JavaScript error: file:///usr/lib64/mozembedlite/components/
EmbedLiteWebrtcUI.js, line 295: ReferenceError: GlobalMuteListener is not
defined
```

Looking back at the file I can see that GlobalMuteListener is a class defined in WebRTCChild.jsm. I may need to do something with this, but just to test things, I'm commenting out the line where it's used so as to see whether it makes any difference.

And it does! Now when I visit the getUserMedia example and select to use the camera I get the usual nice Sailfish OS permissions request page appearing. If I grant the permission, the camera even works!
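As an aside on the GlobalMuteListener error, one alternative to commenting the line out while experimenting would be to guard the call. This isn't what I did above and it isn't a proper fix; it's just a minimal sketch, and initGlobalMuteIfAvailable() is a hypothetical helper name made up for the example.

```javascript
// Minimal sketch: only call GlobalMuteListener.init() if the class exists
// in this scope. Using typeof on an undeclared name is safe and evaluates
// to "undefined" rather than throwing a ReferenceError.
function initGlobalMuteIfAvailable() {
  if (typeof GlobalMuteListener !== "undefined") {
    GlobalMuteListener.init();
    return true;
  }
  return false;
}

// With no GlobalMuteListener defined the call is skipped.
console.log(initGlobalMuteIfAvailable());
```

The return value indicates whether the listener was actually initialised, which makes it easy to log when the mute handling has been skipped.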

Denying the permissions results in an error showing on the web page stating that the request was not allowed, which is exactly what should happen. The only wrinkle is when I try to use the microphone. The correct permissions page appears, but if I allow access I then get the following error output to the console:

```
JavaScript error: resource://gre/actors/AudioPlaybackParent.jsm, line 20:
TypeError: browser is null
```

I'll need to fix this, but not today. I'll take another look at this tomorrow and try to fix both the GlobalMuteListener error and this AudioPlaybackParent.jsm error. One further thing that's worth commenting on is the fact that the video stream colours are a bit strange, as you can see in the screenshot. From what I recall this isn't a new issue: the release version of the browser exhibits this as well. It'd be nice to get it fixed, but having thought about it, I'm not really sure what the problem is. Maybe a way to improve it will become apparent later.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.
