Blog

All items from December 2014

31 Dec 2014 : Automarking Progress

I've always hated marking. Of all the tasks that gravitate around the higher education process, like lecturing, tutoring, creating coursework specifications and writing exams, marking has always felt amongst the least rewarding. I understand its importance, both as a means of providing feedback (formative) and applying judgement (summative). But good feedback takes a great deal of time, and assigning a single number that could significantly impact a student's life chances also takes a great deal of responsibility. Multiply that by the size of a class, and it can become impossible to give it the time - and energy - it deserves.

Automation has always offered the prospect of a partial solution. My secondary-school maths teacher - who was a brilliant man and worth listening to - always said that maths was for the lazy. It uncovers routes to generalisations that reduce the amount of thinking and work needed to solve a problem. Programming is the practical embodiment of this. So if there's one area which needs the support of automation in higher education, it must be marking.

Back in 1995 when I was doing my degree in Oxford, they were already using automated marking for Maple coursework. When I started at Liverpool John Moores in 2004 I was pretty astonished that they weren't doing something similar for marking programming coursework. Roll on ten years and I'm still at LJMU, and programming coursework is still being marked by hand. We have 300 students on our first year programming module, so this is no small undertaking.

To the University's credit, they've agreed to provide funds as a Curriculum Enhancement Project to research into whether this can be automated, and I'm privileged to be working alongside my colleagues Bob Askwith, Paul Fergus and Michael Mackay to try to find out. As I've implied, there are already good tools out there to help with this, but every course has its own approach and requirements. Feedback is a particularly important area for us, so we can't just give a mark based on whether a program executes correctly and gives the right outputs.

For this reason, while Google has spent the tail-end of 2014 evangelising about their self-driving cars, I've been busy setting my sights for automation slightly lower. If a computer can drive me to work, surely it's only right it should then do my work for me when I get there?

There are many existing approaches and tools, along with lots of literature to back them up. Examples include Ceilidh/CourseMarker (Higgins, Gray, Symeonidis, & Tsintsifas, 2005; Lewis & Davies, 2004), Try (Reek, 1989), HoGG (Morris, 2003), Sphere Engine (Cheang, Kurnia, Lim, & Oon, 2003), BOSS (Joy & Luck, 1999), GAME (Blumenstein, Green, Nguyen, & Muthukkumarasamy, 2004), CodeLab, ASSYST (Jackson & Usher, 1997) and others.

Unfortunately many of these existing tools don't seem to be available either publicly or commercially. For those that are, they're not all appropriate for what we need. CourseMarker looked promising, but its site is down and I've not been able to discover any other way to access it. CodeLab is a neat site, which our students would likely benefit from, but at present it wouldn't give us the flexibility we need to fit it in with our existing course structure. The BOSS online submission system looks very viable but deploying it and getting everyone using it would be quite an undertaking; it's something I definitely plan to look into further though. Finally Sphere Engine provides a really neat and simple way to test out programs. In essence it's a simple web service that you upload a source file to, which it then compiles and executes with a given set of inputs. It returns the generated output which can then be checked. It can do this for an astonishing array of language variants (around 65 at the last count: from Ada to Whitespace) and is also the engine that powers the fantastic Sphere Online Judge. Sphere Engine were very helpful when we contacted them, and the simplicity and flexibility of their service was a real draw. Consequently the approach we're developing uses Sphere Engine as the backend processor for our marking checks.
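To give a flavour of the "output which can then be checked" step, here's a minimal sketch in Python. It doesn't call Sphere Engine at all: the `run` callable is a hypothetical stand-in for whatever submits the program and one input to the service and hands back the output, and `normalise`, `check_outputs` and `fake_run` are names invented for illustration. It also assumes that trailing whitespace shouldn't cost a student marks, which is a choice rather than a given.

```python
from typing import Callable, Dict


def normalise(output: str) -> str:
    """Strip trailing whitespace from each line and drop trailing blank lines."""
    lines = [line.rstrip() for line in output.replace("\r\n", "\n").split("\n")]
    while lines and lines[-1] == "":
        lines.pop()
    return "\n".join(lines)


def check_outputs(run: Callable[[str], str], cases: Dict[str, str]) -> float:
    """Return the fraction of test cases whose output matches the expected text.

    `run` is a hypothetical stand-in: it takes the input for one test case
    and returns whatever the student's program printed.
    """
    if not cases:
        return 0.0
    passed = sum(
        1
        for stdin, expected in cases.items()
        if normalise(run(stdin)) == normalise(expected)
    )
    return passed / len(cases)


def fake_run(stdin: str) -> str:
    """Pretend student program: doubles the number it is given."""
    return f"{int(stdin) * 2}\n"


# Two of the three expected outputs are right for a doubling program.
score = check_outputs(fake_run, {"2": "4", "10": "20", "7": "15"})
print(f"{score:.2f}")  # 0.67
```

In practice the fraction would be scaled to however many marks the execution part of the feedback sheet carries.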

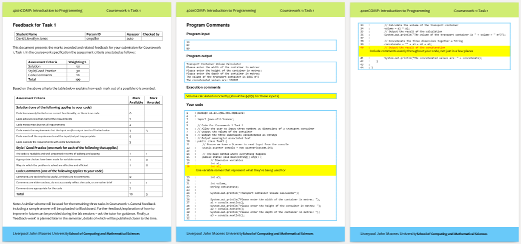

Compilation and input/output checks aren't our only concerns though. The feedback sheet we've been using for the last few years on the module covers code efficiency, good use of variable names, indentation and spacing, and appropriate commenting, as you can see in the example here.

With the aim of matching these as closely as possible, we're therefore applying a few other metrics:

- Comment statistics: Our automated approach doesn't measure the comments themselves, but rather the spacing between them. For Java code the following regular expression will find all of the comments as multi-line blocks: '/\*.*?\*/|//.*?$(?!\s*//)' (beautiful huh?!). The mean and standard deviation of the gap between all comments is used as a measure of quality. Obviously this doesn't capture the actual quality of the comments, but in my anecdotal experience, students who are commenting liberally and consistently are on the right track.
- Variable naming: Experience shows that students often use single letter or sequentially numbered variable names when they're starting out, as it feels far easier than inventing sensible names. In fact, given the first few programs they write are short and self-explanatory, this isn't unreasonable. But at this stage our job is really to teach them good habits (they'll have plenty of opportunity to break them later). So I've added a check to measure the length of variable names, and whether they have numerical postfixes, by pulling variable declarations from the AST of the source code.
- Indentation: As any programmer knows, indentation is stylistic, unless you're using Python or Whitespace. Whether you tack your curly braces on the end of a line or give them a line of their own is a matter of choice, right? Wrong. Indentation is a question of consistency and discipline. Anything less than perfection is inexcusable! This is especially the case when just a few keypresses will provoke Eclipse into reformatting everything to perfection anyway. Okay, so I soften my stance a little with students new to programming, but in practice it's easiest for students to follow a few simple rules (Open a bracket: indent the line afterwards an extra tab. Close a bracket: indent its line one tab fewer. Everything else: indent it the same. Always use tabs, never spaces). These rules are easy to follow, and easy to test for, although in the tests I've implemented they're allowed to use spaces rather than tabs if they really insist.
- Efficient coding: This one has me a bit stumped. Maybe something like McCabe's cyclomatic complexity would work for this, but I'm not sure. Instead, I've lumped this one in as part of the correct execution marks, which isn't right, but probably isn't too far off how it's marked in practice.
- Extra functionality: This is a non-starter as far as automarking's concerned, at least in the immediate future. Maybe someone will one day come up with a clever AI technique for judging this, but in the meantime, this mark will just be thrown away.

Our automarking script performs all of these checks and spits out a marking sheet based on the feedback sheet we were previously filling out by hand. Here's an example:

As you can see, it's not only filling out the marks, but also adding a wodge of feedback based on the student's code at the end. This is a working implementation for the first task the students have to complete on their course. It's by far the easiest task (both in terms of assessment and marking), but the fact it's working demonstrates some kind of viability. I'm confident that most of the metrics will transfer reasonably elegantly to the later assessments too.

There's a lot of real potential here. Based on the set of scripts I marked this year, the automarking process is getting within one mark of my original assessment 80% of the time (with discrepancy mean=1.15, SD=1.5). Rather than taking an evening to mark, it now takes 39.38 seconds.

The ultimate goal is not just to simplify the marking process for us lazy academics, but also to provide better formative feedback to the students. If they're able to submit their code and get near-instant feedback before they submit their final coursework, then I'm confident their final marks will improve as well.

Some may say this is a bit like cheating, but I've thought hard about this. Yes, it makes it easier for them to improve their marks. But their improved marks won't be chimeras; rather, they'll be because the students will have grasped the concepts we've been trying to teach them. Personally I have no time for anyone who thinks it's a good idea to dumb down our courses, but if we can increase students' marks through better teaching techniques that ultimately improve their capabilities, then I'm all for it.

As we roll into 2015 I'm hoping this exercise will reduce my marking load. If that sounds good for you too, feel free to contribute or join me for the ride: the automarking code is up on GitHub, and this is all new for me, so I have a lot to learn.
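For anyone curious about the comment-spacing metric described earlier in this post, here's a rough Python sketch of the idea. It isn't lifted from the automarking code: the regular expression is the one quoted above, but the function names, the sample Java snippet, the choice of population standard deviation, and the definition of a "gap" as the number of whole lines between two comment blocks are all assumptions made for illustration.

```python
import re
import statistics
from typing import List, Optional, Tuple

# The regular expression quoted in the post. DOTALL lets /* ... */ span
# several lines; MULTILINE lets $ match at the end of every line, so a run
# of consecutive // lines is picked up as a single block.
COMMENT_RE = re.compile(r"/\*.*?\*/|//.*?$(?!\s*//)", re.DOTALL | re.MULTILINE)


def comment_gaps(source: str) -> List[int]:
    """Count the lines between the end of each comment block and the next.

    Assumption: a "gap" is the number of whole lines separating two blocks.
    """
    gaps = []
    previous_end = None
    for match in COMMENT_RE.finditer(source):
        start_line = source.count("\n", 0, match.start())
        end_line = source.count("\n", 0, match.end())
        if previous_end is not None:
            gaps.append(max(0, start_line - previous_end - 1))
        previous_end = end_line
    return gaps


def gap_stats(source: str) -> Optional[Tuple[float, float]]:
    """Return (mean, population SD) of the gaps, or None if there are none."""
    gaps = comment_gaps(source)
    if not gaps:
        return None
    return statistics.mean(gaps), statistics.pstdev(gaps)


SAMPLE = """\
/* Prints a greeting. */
public class Hello {
    // Entry point
    // (runs once)
    public static void main(String[] args) {
        int n = 3;
        // Say hello
        System.out.println("Hello");
    }
}
"""

print(comment_gaps(SAMPLE))  # [1, 2]
print(gap_stats(SAMPLE))     # (1.5, 0.5)
```

Note that the two consecutive `//` lines count as one block, which is what the lookahead at the end of the expression is for. A regular expression like this knows nothing about string literals, so a `//` inside a quoted string would be miscounted as a comment.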


14 Dec 2014 : Adafruit Backlights as Nightlights

Yesterday I spent a fun and enlightening day at DoESLiverpool for their monthly Maker Day. It was my first time, and I'm really glad I went (if you live near Liverpool and fancy spending the day building stuff, I recommend it). I got loads of help from the other makers there, and at the end of the day I'd built a software-controllable blinking light and gained a new-found confidence for soldering (not bad for someone who's spent the last twenty years finding excuses to avoid using a soldering iron). Thanks JR, Jackie, Doris, Dan and everyone else I met on the day! Here's the little adafruit Trinket-controlled light (click to embiggen):

The light itself is an adafruit Backlight Module, an LED encased in acrylic that gives a nice consistent light across the surface. In the photos it looks pretty bright, and Molex1701 asked whether it'd be any good for a nightlight. Thanks for the question!

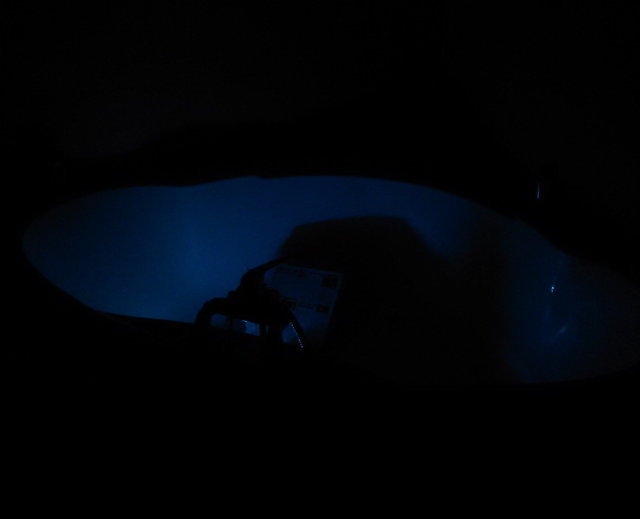

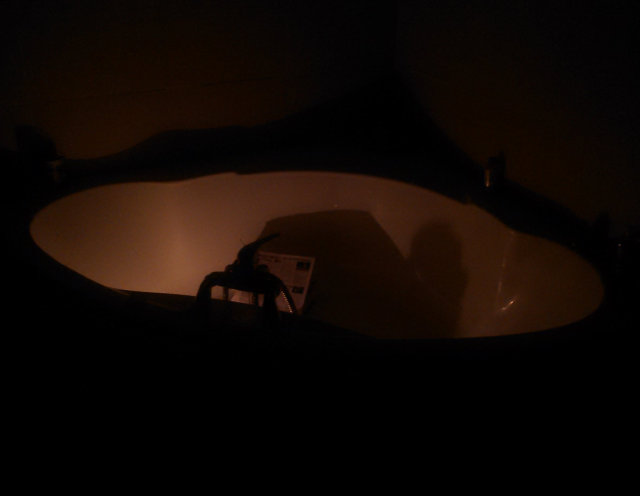

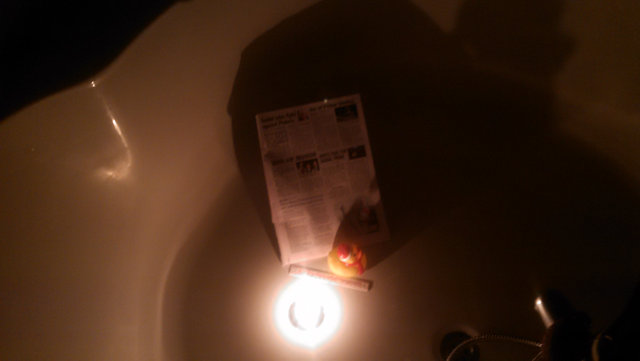

The only thing is I know nothing about lights and lumens and wouldn't trust my own judgement when wandering around in the semi-dark. So to answer the question I thought it'd be easiest to take a few photos. The only room in the flat where we get total darkness during the day is the bathroom, so I stuck the adafruit in the bath along with some helpful gubbins for reference (ruler, rubber duck, copy of Private Eye) and took some photos. As well as the backlight module, there are also some photos with the full light and a standard tealight (like in the photo above) for comparison. I reckon tealights must be a pretty universal standard for photon output levels.

The first three below (also clickable) show the same shot in different lighting conditions from afar. Respectively they're the main bathroom light, the backlight module, and a tealight.

Here are two close-up shots with backlight and tealight respectively.

As you can see from the results, the backlight isn't as bright as a tealight. Whether it'd be bright enough to use as a nightlight is harder to judge, but my inclination is to say it probably isn't. Maybe if you ran a couple of them side-by-side they'd work better.

It's also worth noting the backlight module is somewhat directional. There is light seepage from the back of the stick, but most of the light comes out from one side and things are brighter when in line with it.

It may also be worth saying something about power consumption. Yesterday JR, Doris and I measured the current going through it. The backlight was set up with 3.3V and drew 10mA of current. The battery I'm using is a 150mAh Lithium Ion polymer battery, so I'm guessing the backlight should run for around 15 hours (150mAh divided by 10mA) on a single charge. Add in the power needed for the Trinket and a pinch of reality salt and it's probably much less. Last night it ran from 8pm through to some time between 4am and 10am (it cut out while I was asleep), so that's between 8 and 14 hours.

If you do end up building a nightlight from some of these, Molex1701, please do share!
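For what it's worth, the battery arithmetic above can be written out as a few lines of Python. This is only a back-of-the-envelope sketch: it assumes the battery delivers its full rated capacity and that the current draw is constant, neither of which is likely to hold exactly, and the function names are made up for the example. Run in reverse, the same sum gives the average current that the observed runtimes would imply.

```python
def runtime_hours(capacity_mah: float, current_ma: float) -> float:
    """Ideal runtime: rated capacity divided by a constant current draw."""
    return capacity_mah / current_ma


def implied_current_ma(capacity_mah: float, hours: float) -> float:
    """Average current that would drain the full capacity in `hours`."""
    return capacity_mah / hours


CAPACITY_MAH = 150  # battery rating from the post
BACKLIGHT_MA = 10   # measured backlight draw from the post

# Ideal runtime for the backlight alone.
print(runtime_hours(CAPACITY_MAH, BACKLIGHT_MA))       # 15.0

# Average total draw implied by the observed 14 and 8 hour bounds.
print(round(implied_current_ma(CAPACITY_MAH, 14), 1))  # 10.7
print(round(implied_current_ma(CAPACITY_MAH, 8), 2))   # 18.75
```

So, under those assumptions, the observed 8 to 14 hours would correspond to an average total draw somewhere between roughly 10.7mA and 18.75mA, which leaves room for the Trinket on top of the backlight's 10mA.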

@llewelld @adafruit @DoESLiverpool How well do those screens light up with lights off? I was thinking of making a night light with one.

Molex (@Molex1701), December 14, 2014