Blog

25 Apr 2024 : Day 227 #

Things started falling into place yesterday when the reasons for the crashes became clear. Although the value of embedlite.compositor.external_gl_context was being set correctly to different values depending on whether the running application was the browser or a WebView, the value was being reversed in the case of the browser because the WebEngineSettings::initialize() was being called twice. This initialize() method is supposed to be idempotent (which you have to admit, is a great word!), but due to a glitch in the logic turned out not to be.

The fix was to change the ordering of the execution: moving the place where isInitialized gets set to before the early return caused by the existence of a marker file. That all sounds a bit esoteric, but it was a simple change. I've now lined up all of the pieces so that:

- embedlite.compositor.external_gl_context is set to true in WebEngineSettings::initialize().

- embedlite.compositor.external_gl_context is set to false in DeclarativeWebUtils::setRenderingPreferences().

- isInitialized is set in the correct place.

But unfortunately not quite yet. After making these changes, the browser works fine, but I now get a crash when running the WebView application:

Thread 37 "Compositor" received signal SIGSEGV, Segmentation fault.

[Switching to Thread 0x7f1f94d7e0 (LWP 7616)]

0x0000007ff1105978 in mozilla::gl::GLScreenBuffer::Size (this=0x0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h:290

290 ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h: No

such file or directory.

(gdb) bt

#0 0x0000007ff1105978 in mozilla::gl::GLScreenBuffer::Size (this=0x0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h:290

#1 mozilla::gl::GLContext::OffscreenSize (this=this@entry=0x7ee019aa70)

at gfx/gl/GLContext.cpp:2141

#2 0x0000007ff3664264 in mozilla::embedlite::EmbedLiteCompositorBridgeParent::

CompositeToDefaultTarget (this=0x7fc4ad3530, aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:156

#3 0x0000007ff12b48d8 in mozilla::layers::CompositorVsyncScheduler::

ForceComposeToTarget (this=0x7fc4c36e00, aTarget=aTarget@entry=0x0,

aRect=aRect@entry=0x0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/layers/LayersTypes.h:

82

#4 0x0000007ff12b4934 in mozilla::layers::CompositorBridgeParent::

ResumeComposition (this=this@entry=0x7fc4ad3530)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/RefPtr.h:313

#5 0x0000007ff12b49c0 in mozilla::layers::CompositorBridgeParent::

ResumeCompositionAndResize (this=0x7fc4ad3530, x=<optimized out>,

y=<optimized out>,

width=<optimized out>, height=<optimized out>)

at gfx/layers/ipc/CompositorBridgeParent.cpp:794

#6 0x0000007ff12ad55c in mozilla::detail::RunnableMethodArguments<int, int,

int, int>::applyImpl<mozilla::layers::CompositorBridgeParent, void (mozilla:

:layers::CompositorBridgeParent::*)(int, int, int, int),

StoreCopyPassByConstLRef<int>, StoreCopyPassByConstLRef<int>,

StoreCopyPassByConstLRef<int>, StoreCopyPassByConstLRef<int>, 0ul, 1ul,

2ul, 3ul> (args=..., m=<optimized out>, o=<optimized out>)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/nsThreadUtils.h:1151

[...]

#18 0x0000007ff6a0289c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb)

Thinking back, or more precisely looking back through my diary entries the reason becomes clear. You may recall that back on Day 221 I made some changes in the hope of them fixing the browser rendering. The changes are summarised by this diff:

$ git diff -- gfx/layers/opengl/CompositorOGL.cpp

diff --git a/gfx/layers/opengl/CompositorOGL.cpp b/gfx/layers/opengl/

CompositorOGL.cpp

index 8a423b840dd5..11105c77c43b 100644

--- a/gfx/layers/opengl/CompositorOGL.cpp

+++ b/gfx/layers/opengl/CompositorOGL.cpp

@@ -246,12 +246,14 @@ already_AddRefed<mozilla::gl::GLContext> CompositorOGL::

CreateContext() {

// Allow to create offscreen GL context for main Layer Manager

if (!context && gfxEnv::LayersPreferOffscreen()) {

+ SurfaceCaps caps = SurfaceCaps::ForRGB();

+ caps.preserve = false;

+ caps.bpp16 = gfxVars::OffscreenFormat() == SurfaceFormat::R5G6B5_UINT16;

+

nsCString discardFailureId;

- context = GLContextProvider::CreateHeadless(

- {CreateContextFlags::REQUIRE_COMPAT_PROFILE}, &discardFailureId);

- if (!context->CreateOffscreenDefaultFb(mSurfaceSize)) {

- context = nullptr;

- }

+ context = GLContextProvider::CreateOffscreen(

+ mSurfaceSize, caps, CreateContextFlags::REQUIRE_COMPAT_PROFILE,

+ &discardFailureId);

}

[...]

Now that the underlying error is clear it's time to reverse this change. At the time I even noted the importance of these diary entries as a way of helping in case I might have to do something like this:

Things will get a bit messy the more I change, but the beauty of these diaries is that I'll be keeping a full record. So it should all be clear what gets changed.

And that's exactly what has happened now. Without keeping track of the changes, I'm fairly certain I'd have got in a mess and forgotten I'd made these changes. Now I can reverse them easily.

Having made this reversal, built and installed the executable, and run the code, I'm very happy to see that:

- The browser now works again, rendering and all.

- The WebView app no longer crashes.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

24 Apr 2024 : Day 226 #

Having solved the mystery of the crashing browser, the question today is what to do about it. What I can't immediately understand is how in ESR 78 the value read off for the embedlite.compositor.external_gl_context preference is true when the browser is run, but false when the WebView app is run.

We can see the difference quite clearly from stepping through the AllocPLayerTransactionParent() method on ESR 78. First, this is what we see when running the browser. We can clearly observe that mUseExternalGLContext is set to true:

Thread 39 "Compositor" hit Breakpoint 2, mozilla::embedlite::

EmbedLiteCompositorBridgeParent::AllocPLayerTransactionParent (

this=0x7f809d5670, aBackendHints=..., aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:77

77 PLayerTransactionParent* p =

(gdb) n

80 EmbedLiteWindowParent *parentWindow = EmbedLiteWindowParent::From(

mWindowId);

(gdb) p mUseExternalGLContext

$2 = true

(gdb)

When stepping through the same bit of code when running harbour-webview, mUseExternalGLContext is set to false:

Thread 34 "Compositor" hit Breakpoint 1, mozilla::embedlite::

EmbedLiteCompositorBridgeParent::AllocPLayerTransactionParent (

this=0x7f8cb70d50, aBackendHints=..., aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:77

77 PLayerTransactionParent* p =

(gdb) n

80 EmbedLiteWindowParent *parentWindow = EmbedLiteWindowParent::From(

mWindowId);

(gdb) n

81 if (parentWindow) {

(gdb) p mUseExternalGLContext

$1 = false

(gdb)

How can this be? There must be somewhere it's being changed. I notice that the value is set in the embedding.js file. Could that be it?

// Make gecko compositor use GL context/surface provided by the application.
pref("embedlite.compositor.external_gl_context", false);
// Request the application to create GLContext for the compositor as
// soon as the top level PuppetWidget is creted for the view. Setting
// this pref only makes sense when using external compositor gl context.
pref("embedlite.compositor.request_external_gl_context_early", false);

The other thing I notice is that the value is set to true in declarativewebutils.cpp, which is part of the sailfish-browser code:

void DeclarativeWebUtils::setRenderingPreferences()

{

SailfishOS::WebEngineSettings *webEngineSettings = SailfishOS::

WebEngineSettings::instance();

// Use external Qt window for rendering content

webEngineSettings->setPreference(QString(

"gfx.compositor.external-window"), QVariant(true));

webEngineSettings->setPreference(QString(

"gfx.compositor.clear-context"), QVariant(false));

webEngineSettings->setPreference(QString(

"gfx.webrender.force-disabled"), QVariant(true));

webEngineSettings->setPreference(QString(

"embedlite.compositor.external_gl_context"), QVariant(true));

}

And this wasn't a change made by me:

$ git blame apps/core/declarativewebutils.cpp -L239,239

d8932efa1 src/browser/declarativewebutils.cpp (Raine Makelainen 2016-09-19 20:

16:59 +0300 239) webEngineSettings->setPreference(QString(

"embedlite.compositor.external_gl_context"), QVariant(true));

So the key methods that are of interest to us are WebEngineSettings::initialize() in the sailfish-components-webview project, since this is where I've set the value to false, and DeclarativeWebUtils::setRenderingPreferences() in the sailfish-browser project, since this is where, historically, the value was always forced to true on browser start-up.

It turns out the browser startup sequence is a bit messy. The sequence it goes through (along with a whole bunch of other stuff) is to first call initialize(). This method has a guard inside it to prevent the contents of the method being executed multiple times, which looks like this:

static bool isInitialized = false;

if (isInitialized) {

return;

}

The first time the method is called the isInitialized static variable is set to false and the contents of the method execute. As per our updated code this sets the embedlite.compositor.external_gl_context static preference to false. This is all fine for the WebView. In the case of the browser the setRenderingPreferences() method is called shortly after, setting the value to true. This is all present and correct.

But now comes the catch. A little later on in the execution sequence initialize() is called again. At this point, were the isInitialized value set to true the method would return early and the contents of the method would be skipped. All would be good. But the problem is that it's not set to true, it's still set to false.

That's because the code that sets it to true is at the end of the method and on first execution the method is returning early due to a separate check; this one:

// Guard preferences that should be written only once. If a preference

// needs to be

// forcefully written upon each start that should happen before this.

QString appConfig = QStandardPaths::writableLocation(QStandardPaths::

AppDataLocation);

QFile markerFile(QString("%1/__PREFS_WRITTEN__").arg(appConfig));

if (markerFile.exists()) {

return;

}

This call causes the method to exit early so that the isInitialized variable never gets set.

The fact it's exiting early here makes sense. But I don't think it makes sense for the isInitialized variable to be left as it is. I think it should be set to true even if the method exits early at this point.
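The ordering problem described above can be reproduced in a small standalone sketch. Everything here is a stand-in: SettingsSketch, browserStartup() and the setGuardEarly switch are hypothetical names invented for illustration, not the real sailfish-components-webview code, and the sketch assumes the marker file already exists and that the forced preference is written before the marker check.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for the preference store; not the real WebEngineSettings API.
using Prefs = std::map<std::string, bool>;

const std::string kExternalGl = "embedlite.compositor.external_gl_context";

struct SettingsSketch {
    bool isInitialized = false;  // plays the role of the static guard variable
    bool markerExists = true;    // assume __PREFS_WRITTEN__ is already on disk
    bool setGuardEarly;          // false: original ordering, true: guard set before the early return
    Prefs prefs;

    explicit SettingsSketch(bool guardEarly) : setGuardEarly(guardEarly) {}

    void initialize() {
        if (isInitialized) {
            return;
        }
        // Preferences forced on every start are written before the marker check.
        prefs[kExternalGl] = false;
        if (setGuardEarly) {
            isInitialized = true;
        }
        if (markerExists) {
            return;  // early return: in the original ordering the guard is never set
        }
        // Write-once preferences would be handled here.
        isInitialized = true;
    }

    // Mirrors what the browser does shortly after the first initialize() call.
    void setRenderingPreferences() { prefs[kExternalGl] = true; }
};

// Replays the browser start-up order: initialize, set rendering prefs, initialize again.
// Returns the final value of the external GL context preference.
bool browserStartup(bool guardEarly) {
    SettingsSketch settings(guardEarly);
    settings.initialize();
    settings.setRenderingPreferences();
    settings.initialize();
    return settings.prefs[kExternalGl];
}
```

With the original ordering, browserStartup(false) ends with the preference back at false because the second initialize() call runs its body again. With the guard set before the early return, browserStartup(true) leaves the browser's value of true in place.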

In case you want to see the full gory details for yourself (trust me: you don't!), here's the step through that shows this sequence of events:

$ gdb sailfish-browser

[...]

(gdb) b DeclarativeWebUtils::setRenderingPreferences

Breakpoint 1 at 0x42c48: file ../core/declarativewebutils.cpp, line 233.

(gdb) b WebEngineSettings::initialize

Breakpoint 2 at 0x22ce4

(gdb) r

Starting program: /usr/bin/sailfish-browser

[...]

Thread 1 "sailfish-browse" hit Breakpoint 2, SailfishOS::

WebEngineSettings::initialize () at webenginesettings.cpp:96

96 if (isInitialized) {

(gdb) p isInitialized

$4 = false

(gdb) n

[...]

135 if (markerFile.exists()) {

(gdb)

136 return;

(gdb) c

Continuing.

Thread 1 "sailfish-browse" hit Breakpoint 1, DeclarativeWebUtils::

setRenderingPreferences (this=0x55556785f0) at ../core/

declarativewebutils.cpp:233

233 SailfishOS::WebEngineSettings *webEngineSettings = SailfishOS::

WebEngineSettings::instance();

(gdb) c

Continuing.

Thread 1 "sailfish-browse" hit Breakpoint 2, SailfishOS::

WebEngineSettings::initialize () at webenginesettings.cpp:96

96 if (isInitialized) {

(gdb) p isInitialized

$5 = false

(gdb) n

[...]

135 if (markerFile.exists()) {

(gdb)

136 return;

(gdb) c

Continuing.

[...]

At any rate, it looks like I have some kind of solution: set the preference in both places, but make sure the isInitialized value gets set correctly by moving the place it's set to above the condition that returns early. Tomorrow I'll install the packages with this change and give it a go.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

23 Apr 2024 : Day 225 #

As we left things yesterday we were looking at nsWindow::GetNativeData() and the fact it does things the same way for both ESR 78 and ESR 91, simply returning GetGLContext() in response to a NS_NATIVE_OPENGL_CONTEXT parameter being passed in.

Interestingly, this call to GetGLContext() is exactly where we saw the GLContext being created on ESR 78 on day 223. So it's quite possible that both calls are going through on both versions, yet while it succeeds on ESR 78, it fails on ESR 91. It's not at all clear why that might be. Time to find out.

Crucially there is a difference between the code in GetGLContext() on ESR 78 compared to the code on ESR 91. Here's the very start of the ESR 78 method:

GLContext*

nsWindow::GetGLContext() const

{

LOGT("this:%p, UseExternalContext:%d", this, sUseExternalGLContext);

if (sUseExternalGLContext) {

void* context = nullptr;

void* surface = nullptr;

void* display = nullptr;

[...]

Notice how the entrance to the main body of the function is gated by a variable called sUseExternalGLContext. In order for this method to return something non-null, it's essential that this is set to true. On ESR 91 this has changed from a variable to a static preference that looks like this:

GLContext*

nsWindow::GetGLContext() const

{

LOGT("this:%p, UseExternalContext:%d", this,

StaticPrefs::embedlite_compositor_external_gl_context());

if (StaticPrefs::embedlite_compositor_external_gl_context()) {

void* context = nullptr;

void* surface = nullptr;

void* display = nullptr;

[...]

This was actually a change I made myself and it's not really a very dramatic change at all. In ESR 78 the sUseExternalGLContext variable was being mirrored from a static preference, which is one that can be read very quickly, so there was no real reason to copy it into a variable. Hence I just switched out the variable with direct accesses of the static pref instead. That was all documented back on Day 97.

The value of the static pref is set to true by default, as we can see in the StaticPrefList.yaml file:

# Make gecko compositor use GL context/surface provided by the application

- name: embedlite.compositor.external_gl_context

type: bool

value: true

mirror: always

However, this value can be overridden by the user preferences stored in ~/.local/share/org.sailfishos/browser/.mozilla/prefs.js. Looking inside that file I see the following:

user_pref("embedlite.compositor.external_gl_context", false);

I've switched that back to true and now, when I run the browser... woohoo! Rendering works again. Great! This is a huge relief. The onscreen rendering pipeline is still working just fine.
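The way a stored user preference wins over the built-in default can be pictured with a short sketch. This is only a simplified model, assuming a two-layer lookup: PrefMap and effectivePref() are hypothetical names, and Gecko's real preference service does considerably more than this.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical two-layer preference model: built-in defaults plus user overrides.
using PrefMap = std::map<std::string, bool>;

// Returns the user value if one is stored, otherwise the built-in default,
// otherwise the supplied fallback.
bool effectivePref(const PrefMap& defaults, const PrefMap& userPrefs,
                   const std::string& name, bool fallback = false) {
    auto user = userPrefs.find(name);
    if (user != userPrefs.end()) {
        return user->second;  // an entry like those in prefs.js takes priority
    }
    auto def = defaults.find(name);
    if (def != defaults.end()) {
        return def->second;   // a default like those in StaticPrefList.yaml
    }
    return fallback;
}
```

In this model a default of true combined with a stored user value of false yields false, which matches the behaviour seen here: the default says true, but the entry in prefs.js flips it.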

However, the next time I fire the browser up it crashes again. And when I check the preferences file I can see the value has switched back to false. That's because I've set it to do that in WebEngineSettings that's part of sailfish-components-webview:

// Ensure the renderer is configured correctly

engineSettings->setPreference(QStringLiteral(

"gfx.webrender.force-disabled"),

QVariant(true));

engineSettings->setPreference(QStringLiteral(

"embedlite.compositor.external_gl_context"),

QVariant(false));

And the reason for that was documented back on Day 165, where I wrote this:

As we can see, it comes down to this embedlite.compositor.external_gl_context static preference, which needs to be set to false for the condition to be entered.

But I obviously wasn't entirely comfortable with this at the time and went on to write the following:

I'm going to set it to false explicitly for the WebView. But this

immediately makes me feel nervous: this setting isn't new and there's a

reason it's not being touched in the WebView code. It makes me think that

I'm travelling down a rendering pipeline path that I shouldn't be.

And so it panned out. Looking back at what I wrote then, the issue I was trying to address by flipping the static preference was the need to enter the condition in the following bit of code:

PLayerTransactionParent*

EmbedLiteCompositorBridgeParent::AllocPLayerTransactionParent(const

nsTArray<LayersBackend>& aBackendHints,

const LayersId&

aId)

{

PLayerTransactionParent* p =

CompositorBridgeParent::AllocPLayerTransactionParent(aBackendHints, aId);

EmbedLiteWindowParent *parentWindow = EmbedLiteWindowParent::From(mWindowId);

if (parentWindow) {

parentWindow->GetListener()->CompositorCreated();

}

if (!StaticPrefs::embedlite_compositor_external_gl_context()) {

// Prepare Offscreen rendering context

PrepareOffscreen();

}

return p;

}

I wanted PrepareOffscreen() to be called and negating this preference seemed the neatest and easiest way to make it happen.

But now I need the opposite, so for the time being I've disabled the forcing of this preference in sailfish-components-webview. That change necessitated (of course) a rebuild and reinstallation of the component.
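The two gates quoted in this entry pull in opposite directions, which a tiny sketch makes explicit. This is just a reading of the two snippets above, with hypothetical names (CompositorPathSketch, pathFor()); it doesn't model anything else the compositor does.

```cpp
#include <cassert>

// Hypothetical summary of the two checks on the external GL context preference.
struct CompositorPathSketch {
    bool widgetSuppliesContext;  // the body of nsWindow::GetGLContext() is entered
    bool preparesOffscreen;      // PrepareOffscreen() is called
};

// Maps the preference value onto the two outcomes gated by it.
CompositorPathSketch pathFor(bool externalGlContext) {
    CompositorPathSketch path;
    path.widgetSuppliesContext = externalGlContext;
    path.preparesOffscreen = !externalGlContext;
    return path;
}
```

So a single global value can only satisfy one of the two cases at a time: true suits the browser's onscreen path, false suits the WebView's offscreen path.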

The browser is now back up and running. Hooray! But the WebView now crashes before it even attempts to render a page. That's not unexpected and presumably will take us back to this failure to call PrepareOffscreen(). I'm going to investigate that further, but not today, it'll have to wait until tomorrow.

Even though this was one step backwards, one step forwards, it still feels like progress. We'll get there.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

22 Apr 2024 : Day 224 #

Before we get started, a content warning. If you're a little squeamish or discussions about human illness make you uncomfortable, this might be one to skip.

Because today was not the day of development I was hoping it was going to be. Last night I found myself experiencing a bad case of food poisoning. I woke in the middle of the night, or maybe I never went to sleep, feeling deeply uncomfortable. After a long period of unrest I eventually found myself arched over the toilet bowl emptying the contents of my stomach into it.

I've spent the rest of the day recuperating, mostly sleeping, consuming only liquids (large quantities of Lucozade) and drifting in and out of consciousness. It seems like it was something I ate, which means it's just a case of waiting it out, but the lack of sleep, elevated temperature and general discomfort has left me in a state of hazy semi-coherence. Focusing has not really been an option.

After having spent the day like this it's now late evening and the chance for me to do much of anything, let alone development, has sadly passed.

But I'm going to try to seize the moment nonetheless. There's one simple thing I think I can do that will help my task tomorrow. You'll recall that the question I need to answer is why the following is returning a value on ESR 78, but returning null on ESR 91:

void* widgetOpenGLContext =

widget ? widget->GetNativeData(NS_NATIVE_OPENGL_CONTEXT) : nullptr;

The GetNativeData() method is an override, which means that there are potentially multiple different versions that might be being called when this bit of code is executed. The pointer to the method will be taken from the widget object's vtable. So it would be useful to know what method is actually being called on the two different versions of the browser.
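As a reminder of why the static type alone doesn't answer this, here's a minimal sketch of virtual dispatch. The class names are hypothetical and bear no relation to the actual Gecko widget hierarchy; each override just returns a label so it's clear which one ran.

```cpp
#include <cassert>
#include <string>

// Hypothetical classes for illustration; these are not the Gecko widget types.
struct BaseWidgetSketch {
    virtual ~BaseWidgetSketch() = default;
    // Returns a label identifying which implementation was executed.
    virtual std::string GetNativeData(int /*aDataType*/) const {
        return "BaseWidgetSketch";
    }
};

struct EmbedWindowSketch : BaseWidgetSketch {
    std::string GetNativeData(int /*aDataType*/) const override {
        return "EmbedWindowSketch";
    }
};

struct OtherWidgetSketch : BaseWidgetSketch {
    std::string GetNativeData(int /*aDataType*/) const override {
        return "OtherWidgetSketch";
    }
};

// The caller only sees the base type; the object's vtable decides which override runs.
std::string whichOverride(const BaseWidgetSketch& widget) {
    const int dataType = 12;  // arbitrary value for the sketch
    return widget.GetNativeData(dataType);
}
```

The call site in whichOverride() is identical in every case, yet the result depends on the dynamic type of the object passed in. That's why a breakpoint on every version of the method is a quick way to find out which one is really being used.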

The process for checking this is simple with the debugger: place a breakpoint on CompositorOGL::CreateContext() as before. When it hits, place a new breakpoint on every version of GetNativeData(), run the code onward and wait to see which one is hit first.

Here goes, first with ESR 78:

Thread 39 "Compositor" hit Breakpoint 1, mozilla::layers::

CompositorOGL::CreateContext (this=0x7ea0003420)

at gfx/layers/opengl/CompositorOGL.cpp:223

223 already_AddRefed<mozilla::gl::GLContext> CompositorOGL::CreateContext()

{

(gdb) b GetNativeData

Breakpoint 2 at 0x7fbbdbe5e0: GetNativeData. (5 locations)

(gdb) c

Continuing.

Thread 39 "Compositor" hit Breakpoint 2, mozilla::embedlite::nsWindow:

:GetNativeData (this=0x7f80c8d500, aDataType=12)

at mobile/sailfishos/embedshared/nsWindow.cpp:176

176 LOGT("t:%p, DataType: %i", this, aDataType);

(gdb) bt

#0 mozilla::embedlite::nsWindow::GetNativeData (this=0x7f80c8d500,

aDataType=12)

at mobile/sailfishos/embedshared/nsWindow.cpp:176

#1 0x0000007fba682718 in mozilla::layers::CompositorOGL::CreateContext (

this=0x7ea0003420)

at gfx/layers/opengl/CompositorOGL.cpp:228

#2 0x0000007fba6a33a4 in mozilla::layers::CompositorOGL::Initialize (

this=0x7ea0003420, out_failureReason=0x7edb30b720)

at gfx/layers/opengl/CompositorOGL.cpp:374

#3 0x0000007fba77aff4 in mozilla::layers::CompositorBridgeParent::

NewCompositor (this=this@entry=0x7f807596a0, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1534

#4 0x0000007fba784660 in mozilla::layers::CompositorBridgeParent::

InitializeLayerManager (this=this@entry=0x7f807596a0, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1491

#5 0x0000007fba7847a8 in mozilla::layers::CompositorBridgeParent::

AllocPLayerTransactionParent (this=this@entry=0x7f807596a0,

aBackendHints=..., aId=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1587

#6 0x0000007fbca81234 in mozilla::embedlite::EmbedLiteCompositorBridgeParent::

AllocPLayerTransactionParent (this=0x7f807596a0, aBackendHints=...,

aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:77

#7 0x0000007fba05f3f0 in mozilla::layers::PCompositorBridgeParent::

OnMessageReceived (this=0x7f807596a0, msg__=...) at

PCompositorBridgeParent.cpp:1391

[...]

#23 0x0000007fb735989c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb)

And then with ESR 91:

Thread 37 "Compositor" hit Breakpoint 1, mozilla::layers::

CompositorOGL::CreateContext (this=this@entry=0x7ed8002ed0)

at gfx/layers/opengl/CompositorOGL.cpp:227

227 already_AddRefed<mozilla::gl::GLContext> CompositorOGL::CreateContext()

{

(gdb) b GetNativeData

Breakpoint 2 at 0x7ff41fcef8: GetNativeData. (4 locations)

(gdb) c

Continuing.

Thread 37 "Compositor" hit Breakpoint 2, mozilla::embedlite::nsWindow:

:GetNativeData (this=0x7fc89ffc80, aDataType=12)

at mobile/sailfishos/embedshared/nsWindow.cpp:164

164 {

(gdb) bt

#0 mozilla::embedlite::nsWindow::GetNativeData (this=0x7fc89ffc80,

aDataType=12)

at mobile/sailfishos/embedshared/nsWindow.cpp:164

#1 0x0000007ff293aae4 in mozilla::layers::CompositorOGL::CreateContext (

this=this@entry=0x7ed8002ed0)

at gfx/layers/opengl/CompositorOGL.cpp:232

#2 0x0000007ff29503b8 in mozilla::layers::CompositorOGL::Initialize (

this=0x7ed8002ed0, out_failureReason=0x7fc17a8510)

at gfx/layers/opengl/CompositorOGL.cpp:397

#3 0x0000007ff2a66094 in mozilla::layers::CompositorBridgeParent::

NewCompositor (this=this@entry=0x7fc8a01850, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1493

#4 0x0000007ff2a71110 in mozilla::layers::CompositorBridgeParent::

InitializeLayerManager (this=this@entry=0x7fc8a01850, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1436

#5 0x0000007ff2a71240 in mozilla::layers::CompositorBridgeParent::

AllocPLayerTransactionParent (this=this@entry=0x7fc8a01850,

aBackendHints=..., aId=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1546

#6 0x0000007ff4e07ca8 in mozilla::embedlite::EmbedLiteCompositorBridgeParent::

AllocPLayerTransactionParent (this=0x7fc8a01850, aBackendHints=...,

aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:80

#7 0x0000007ff23fe52c in mozilla::layers::PCompositorBridgeParent::

OnMessageReceived (this=0x7fc8a01850, msg__=...) at

PCompositorBridgeParent.cpp:1285

[...]

#22 0x0000007fefba889c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb)

So in both cases the version of GetNativeData() being called is mozilla::embedlite::nsWindow::GetNativeData(). Looking at the code for this method, there's a pretty svelte switch statement inside that depends on the aDataType value being passed in. In our case this value is always NS_NATIVE_OPENGL_CONTEXT. And the code for that case is pretty simple:

case NS_NATIVE_OPENGL_CONTEXT: {

MOZ_ASSERT(!GetParent());

return GetGLContext();

}

This is the same for both ESR 78 and ESR 91, so to get to the bottom of this will require digging a bit deeper. But at least we're one step further along.

I've not made a lot of progress today, but perhaps that's to be expected. I'll continue following this lead up tomorrow.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

21 Apr 2024 : Day 223 #

I do love travelling by train. A big part of the reason for this is the freedom it gives me to do other things while I'm travelling. Cars and buses simply can't offer that same empowerment. But even then travelling by train isn't the same as sitting at a desk that's static relative to the motion of the surface of the earth. Debugging multiple phones simultaneously just isn't very convenient on a train.

Both backtraces have CompositorOGL::CreateContext() in them at frame 4 and 6 respectively, so that's presumably where the decision to go one way or the other is happening. In the ESR 78 executable nsWindow::GetGLContext() is being called from there, whilst in ESR 91 it's GLContextProviderEGL::CreateHeadless().

So the difference here appears to be that on ESR 91 the following condition is entered:

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

Comment

And so now here I am, back home at my desk, and able to properly perform the debugging I attempted to do yesterday while hurtling between Birmingham and Cambridge at 120 km per hour. It also helps that I'm not feeling quite so exhausted this morning after a long day either.

The question I'm asking is, when using the browser, which of the routes I identified yesterday are followed when creating the GLContext object, since this is where the offscreen status is set and stored.

Using the debugger it's easy to find where this is happening on ESR 78:

Thread 39 "Compositor" hit Breakpoint 1, mozilla::gl::GLContext::

GLContext (this=this@entry=0x7ea01118b0, flags=mozilla::gl::

CreateContextFlags::NONE,

caps=..., sharedContext=sharedContext@entry=0x0, isOffscreen=false,

useTLSIsCurrent=useTLSIsCurrent@entry=false)

at gfx/gl/GLContext.cpp:274

274 GLContext::GLContext(CreateContextFlags flags, const SurfaceCaps& caps,

(gdb) bt

#0 mozilla::gl::GLContext::GLContext (this=this@entry=0x7ea01118b0,

flags=mozilla::gl::CreateContextFlags::NONE, caps=...,

sharedContext=sharedContext@entry=0x0, isOffscreen=false,

useTLSIsCurrent=useTLSIsCurrent@entry=false)

at gfx/gl/GLContext.cpp:274

#1 0x0000007fba607af0 in mozilla::gl::GLContextEGL::GLContextEGL (

this=0x7ea01118b0, egl=0x7ea0110db0, flags=<optimized out>, caps=...,

isOffscreen=<optimized out>, config=0x0, surface=0x5555cc3980,

context=0x7ea0004d80)

at gfx/gl/GLContextProviderEGL.cpp:472

#2 0x0000007fba60f0d8 in mozilla::gl::GLContextProviderEGL::

CreateWrappingExisting (aContext=0x7ea0004d80, aSurface=0x5555cc3980,

aDisplay=<optimized out>)

at /home/flypig/Documents/Development/jolla/gecko-dev-project/gecko-dev/

obj-build-mer-qt-xr/dist/include/mozilla/cxxalloc.h:33

#3 0x0000007fbca9a388 in mozilla::embedlite::nsWindow::GetGLContext (

this=0x7f80945810)

at mobile/sailfishos/embedshared/nsWindow.cpp:415

#4 0x0000007fba682718 in mozilla::layers::CompositorOGL::CreateContext (

this=0x7ea0003420)

at gfx/layers/opengl/CompositorOGL.cpp:228

#5 0x0000007fba6a33a4 in mozilla::layers::CompositorOGL::Initialize (

this=0x7ea0003420, out_failureReason=0x7edb32d720)

at gfx/layers/opengl/CompositorOGL.cpp:374

#6 0x0000007fba77aff4 in mozilla::layers::CompositorBridgeParent::

NewCompositor (this=this@entry=0x7f808cbf80, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1534

#7 0x0000007fba784660 in mozilla::layers::CompositorBridgeParent::

InitializeLayerManager (this=this@entry=0x7f808cbf80, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1491

#8 0x0000007fba7847a8 in mozilla::layers::CompositorBridgeParent::

AllocPLayerTransactionParent (this=this@entry=0x7f808cbf80,

aBackendHints=..., aId=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1587

#9 0x0000007fbca81234 in mozilla::embedlite::EmbedLiteCompositorBridgeParent::

AllocPLayerTransactionParent (this=0x7f808cbf80, aBackendHints=...,

aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:77

#10 0x0000007fba05f3f0 in mozilla::layers::PCompositorBridgeParent::

OnMessageReceived (this=0x7f808cbf80, msg__=...) at

PCompositorBridgeParent.cpp:1391

[...]

#26 0x0000007fb735989c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb)

So this is the case of a call to CreateWrappingExisting():

already_AddRefed<GLContext> GLContextProviderEGL::CreateWrappingExisting(

void* aContext, void* aSurface, void* aDisplay) {

[...]

RefPtr<GLContextEGL> gl =

new GLContextEGL(egl, CreateContextFlags::NONE, caps, false, config,

(EGLSurface)aSurface, (EGLContext)aContext);

[...]

On ESR 91 the situation is that we have a call to GLContextEGL::CreateEGLPBufferOffscreenContextImpl():

Thread 37 "Compositor" hit Breakpoint 1, mozilla::gl::GLContext::

GLContext (this=this@entry=0x7f6019aa80, desc=...,

sharedContext=sharedContext@entry=0x0,

useTLSIsCurrent=useTLSIsCurrent@entry=false)

at gfx/gl/GLContext.cpp:283

283 GLContext::GLContext(const GLContextDesc& desc, GLContext*

sharedContext,

(gdb) bt

#0 mozilla::gl::GLContext::GLContext (this=this@entry=0x7f6019aa80, desc=...,

sharedContext=sharedContext@entry=0x0,

useTLSIsCurrent=useTLSIsCurrent@entry=false)

at gfx/gl/GLContext.cpp:283

#1 0x0000007ff28a9450 in mozilla::gl::GLContextEGL::GLContextEGL (

this=0x7f6019aa80,

egl=std::shared_ptr<mozilla::gl::EglDisplay> (use count 5, weak count 2) =

{...}, desc=..., config=0x5555ab3f60, surface=0x7f60004bb0,

context=0x7f60004c30)

at gfx/gl/GLContextProviderEGL.cpp:496

#2 0x0000007ff28cfd18 in mozilla::gl::GLContextEGL::CreateGLContext (egl=std::

shared_ptr<mozilla::gl::EglDisplay> (use count 5, weak count 2) = {...},

desc=..., config=<optimized out>, config@entry=0x5555ab3f60,

surface=surface@entry=0x7f60004bb0, useGles=useGles@entry=true,

out_failureId=out_failureId@entry=0x7fb179c1b8)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/cxxalloc.h:33

#3 0x0000007ff28d0858 in mozilla::gl::GLContextEGL::

CreateEGLPBufferOffscreenContextImpl (

egl=std::shared_ptr<mozilla::gl::EglDisplay> (use count 5, weak count 2) =

{...}, desc=..., size=..., useGles=useGles@entry=true,

out_failureId=out_failureId@entry=0x7fb179c1b8)

at include/c++/8.3.0/ext/atomicity.h:96

#4 0x0000007ff28d0a6c in mozilla::gl::GLContextEGL::

CreateEGLPBufferOffscreenContext (

display=std::shared_ptr<mozilla::gl::EglDisplay> (use count 5, weak count

2) = {...}, desc=..., size=...,

out_failureId=out_failureId@entry=0x7fb179c1b8)

at include/c++/8.3.0/ext/atomicity.h:96

#5 0x0000007ff28d0ba0 in mozilla::gl::GLContextProviderEGL::CreateHeadless (

desc=..., out_failureId=out_failureId@entry=0x7fb179c1b8)

at include/c++/8.3.0/ext/atomicity.h:96

#6 0x0000007ff293abc0 in mozilla::layers::CompositorOGL::CreateContext (

this=this@entry=0x7f60002ed0)

at gfx/layers/opengl/CompositorOGL.cpp:254

#7 0x0000007ff29503b8 in mozilla::layers::CompositorOGL::Initialize (

this=0x7f60002ed0, out_failureReason=0x7fb179c510)

at gfx/layers/opengl/CompositorOGL.cpp:397

#8 0x0000007ff2a66094 in mozilla::layers::CompositorBridgeParent::

NewCompositor (this=this@entry=0x7fc89abd50, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1493

#9 0x0000007ff2a71110 in mozilla::layers::CompositorBridgeParent::

InitializeLayerManager (this=this@entry=0x7fc89abd50, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1436

#10 0x0000007ff2a71240 in mozilla::layers::CompositorBridgeParent::

AllocPLayerTransactionParent (this=this@entry=0x7fc89abd50,

aBackendHints=..., aId=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1546

#11 0x0000007ff4e07ca8 in mozilla::embedlite::EmbedLiteCompositorBridgeParent::

AllocPLayerTransactionParent (this=0x7fc89abd50, aBackendHints=...,

aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:80

#12 0x0000007ff23fe52c in mozilla::layers::PCompositorBridgeParent::

OnMessageReceived (this=0x7fc89abd50, msg__=...) at

PCompositorBridgeParent.cpp:1285

[...]

#27 0x0000007fefba889c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb)

These differences are bigger than expected; I'll need to realign them.

Both backtraces have CompositorOGL::CreateContext() in them at frame 4 and 6 respectively, so that's presumably where the decision to go one way or the other is happening. In the ESR 78 executable nsWindow::GetGLContext() is being called from there, whilst in ESR 91 it's GLContextProviderEGL::CreateHeadless().

So the difference here appears to be that on ESR 91 the following condition is entered:

// Allow to create offscreen GL context for main Layer Manager

if (!context && gfxEnv::LayersPreferOffscreen()) {

[...]

On ESR 78 it's never reached. The reason becomes clear as I step through the code. The value of the widgetOpenGLContext variable that's collected from the widget is non-null, causing the method to exit early:

Thread 39 "Compositor" hit Breakpoint 1, mozilla::layers::

CompositorOGL::CreateContext (this=0x7ea0003420)

at gfx/layers/opengl/CompositorOGL.cpp:223

223 already_AddRefed<mozilla::gl::GLContext> CompositorOGL::CreateContext()

{

(gdb) n

227 nsIWidget* widget = mWidget->RealWidget();

(gdb) p context

$1 = {mRawPtr = 0x0}

(gdb) n

228 void* widgetOpenGLContext =

(gdb) p widget

$2 = (nsIWidget *) 0x7f80c86f60

(gdb) n

230 if (widgetOpenGLContext) {

(gdb) p widgetOpenGLContext

$3 = (void *) 0x7ea0111800

(gdb)

As on ESR 78, on ESR 91 the value of context is set to null, while widget has a non-null value. But unlike on ESR 78 the widgetOpenGLContext that's retrieved from widget is null:

Thread 37 "Compositor" hit Breakpoint 1, mozilla::layers::

CompositorOGL::CreateContext (this=this@entry=0x7ed8002ed0)

at gfx/layers/opengl/CompositorOGL.cpp:227

227 already_AddRefed<mozilla::gl::GLContext> CompositorOGL::CreateContext()

{

(gdb) n

231 nsIWidget* widget = mWidget->RealWidget();

(gdb) p context

$3 = {mRawPtr = 0x0}

(gdb) n

232 void* widgetOpenGLContext =

(gdb) p widget

$4 = (nsIWidget *) 0x7fc8550d80

(gdb) n

234 if (widgetOpenGLContext) {

(gdb) p widgetOpenGLContext

$5 = (void *) 0x0

(gdb)

As a consequence the method doesn't return early and the context is created via a different route. So the question we have to answer is why the following returns a value on ESR 78 but null on ESR 91:

void* widgetOpenGLContext =

widget ? widget->GetNativeData(NS_NATIVE_OPENGL_CONTEXT) : nullptr;
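
The decision being stepped through above boils down to something like the following. This is a minimal sketch rather than the real method: ContextRoute and ChooseRoute() are names made up for illustration, the preferOffscreen parameter stands in for gfxEnv::LayersPreferOffscreen(), and the elided parts of CreateContext() are ignored entirely.

```cpp
// Hypothetical labels for the two routes seen in the backtraces.
enum class ContextRoute { WrapExisting, Headless, None };

// Sketch of the branch order: a non-null context from the widget wins
// and causes an early return; only otherwise is the offscreen
// preference consulted.
ContextRoute ChooseRoute(const void* widgetOpenGLContext,
                         bool preferOffscreen) {
  if (widgetOpenGLContext) {
    return ContextRoute::WrapExisting;  // the ESR 78 browser case
  }
  if (preferOffscreen) {
    return ContextRoute::Headless;  // the ESR 91 browser case
  }
  return ContextRoute::None;  // other routes are not modelled here
}
```

Seen this way, the only input that differs between the two runs is the pointer that comes back from the widget.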

Finding out the answer to this will have to wait until tomorrow, when I have a bit more time in which I'll hopefully be able to get this sorted.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

20 Apr 2024 : Day 222 #

I'm travelling for work at the moment. Last night I stayed at an easyHotel. I'm a fan of the easyHotel concept: clean, cheap and well-located, but also tiny and basic. The fact that having a window is an optional paid-for extra tells you everything you need to know about the chain. There was just enough room around the edge of the bed to squeeze a human leg or two, but certainly no space for a desk. This presented me with a problem when it came to leaving my laptop running. I needed to have the build I started yesterday run overnight. Sending my laptop to sleep wasn't an option.

For a while I contemplated leaving it running on the bed next to me, but I had visions of waking up in the middle of night with my bed on fire or, worse, with my laptop shattered into little pieces having been thrown to the floor.

Eventually the very-helpful hotel staff found me a mini ironing board. After removing the cover it made a passable laptop rack. And so this morning I find myself in a coffee shop in the heart of Birmingham (which is itself the heart of the UK) with a freshly minted set of packages ready to try out on my phone.

The newly installed debug-symbol adorned binaries immediately deliver.

Thread 36 "Compositor" received signal SIGSEGV, Segmentation fault.

[Switching to Thread 0x7f39d297d0 (LWP 31614)]

0x0000007ff28a8978 in mozilla::gl::GLScreenBuffer::Size (this=0x0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h:290

290 ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h: No

such file or directory.

(gdb) bt

#0 0x0000007ff28a8978 in mozilla::gl::GLScreenBuffer::Size (this=0x0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h:290

#1 mozilla::gl::GLContext::OffscreenSize (this=this@entry=0x7ee019aa30)

at gfx/gl/GLContext.cpp:2141

#2 0x0000007ff4e07264 in mozilla::embedlite::EmbedLiteCompositorBridgeParent::

CompositeToDefaultTarget (this=0x7fc8b77bc0, aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:156

#3 0x0000007ff2a578d8 in mozilla::layers::CompositorVsyncScheduler::

ForceComposeToTarget (this=0x7fc8ca6a10, aTarget=aTarget@entry=0x0,

aRect=aRect@entry=0x0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/layers/LayersTypes.h:

82

#4 0x0000007ff2a57934 in mozilla::layers::CompositorBridgeParent::

ResumeComposition (this=this@entry=0x7fc8b77bc0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/RefPtr.h:313

#5 0x0000007ff2a579c0 in mozilla::layers::CompositorBridgeParent::

ResumeCompositionAndResize (this=0x7fc8b77bc0, x=<optimized out>,

y=<optimized out>,

width=<optimized out>, height=<optimized out>)

at gfx/layers/ipc/CompositorBridgeParent.cpp:794

#6 0x0000007ff2a5055c in mozilla::detail::RunnableMethodArguments<int, int,

int, int>::applyImpl<mozilla::layers::CompositorBridgeParent, void (mozilla:

:layers::CompositorBridgeParent::*)(int, int, int, int),

StoreCopyPassByConstLRef<int>, StoreCopyPassByConstLRef<int>,

StoreCopyPassByConstLRef<int>, StoreCopyPassByConstLRef<int>, 0ul, 1ul,

2ul, 3ul> (args=..., m=<optimized out>, o=<optimized out>)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/nsThreadUtils.h:1151

[...]

#18 0x0000007fefba889c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb)

The basis of the problem is that GLContext::OffscreenSize() is calling Size() on mScreen, the context's GLScreenBuffer, which is null:

const gfx::IntSize& GLContext::OffscreenSize() const {

MOZ_ASSERT(IsOffscreen());

return mScreen->Size();

}
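
To illustrate the failure mode, here's a minimal sketch of what a guarded version of that lookup could look like. It's not a proposed fix and none of it is gecko code: IntSize, SketchScreenBuffer, SketchContext and TryGetOffscreenSize() are all hypothetical names, with the screen member playing the role of mScreen.

```cpp
#include <memory>

// Hypothetical stand-in for gfx::IntSize.
struct IntSize {
  int width;
  int height;
};

// Hypothetical stand-in for GLScreenBuffer.
struct SketchScreenBuffer {
  IntSize size;
  const IntSize& Size() const { return size; }
};

struct SketchContext {
  bool isOffscreen = false;
  std::unique_ptr<SketchScreenBuffer> screen;  // plays the role of mScreen

  // Writes the size to aOut and returns true only when the context is
  // offscreen and actually has a screen buffer to ask.
  bool TryGetOffscreenSize(IntSize* aOut) const {
    if (!isOffscreen || !screen) {
      return false;
    }
    *aOut = screen->Size();
    return true;
  }
};
```

A guard like this would avoid the segfault, but it would also hide the more interesting question of why a context marked as offscreen has no screen buffer in the first place.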

Checking the diff, this GLContext::OffscreenSize() method was added by me as part of the recent shake-up of the offscreen render code. Here's the change I made in EmbedLiteCompositorBridgeParent.cpp that's making this call:

@@ -151,8 +153,7 @@ EmbedLiteCompositorBridgeParent::CompositeToDefaultTarget(

VsyncId aId)

if (context->IsOffscreen()) {

MutexAutoLock lock(mRenderMutex);

- if (context->GetSwapChain()->OffscreenSize() != mEGLSurfaceSize

- && !context->GetSwapChain()->Resize(mEGLSurfaceSize)) {

+ if (context->OffscreenSize() != mEGLSurfaceSize &&

!context->ResizeOffscreen(mEGLSurfaceSize)) {

return;

}

}

As you can see CompositeToDefaultTarget() isn't new. It's the way it's resizing the screen that's causing problems. Interestingly this change has actually reverted back to the code as it is in ESR 78. So that means I can step through the ESR 78 version to compare the two.

But, my time in this coffee shop is coming to an end, so that will be a task for my journey back to Cambridge this evening on the train.

[...]

I'm on the train again, heading back to Cambridge. My next task is to step through the CompositeToDefaultTarget() method on ESR 78. The question I want to know the answer to is whether it's making the call to context->OffscreenSize() or not.

Brace yourself for a lengthy debugging step-through. As I've typically come to say, feel free to skip this without losing the context.

Thread 40 "Compositor" hit Breakpoint 1, mozilla::embedlite::

EmbedLiteCompositorBridgeParent::CompositeToDefaultTarget (

this=0x7f80a77420, aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:137

137 {

(gdb) n

138 const CompositorBridgeParent::LayerTreeState* state =

CompositorBridgeParent::GetIndirectShadowTree(RootLayerTreeId());

(gdb) n

139 NS_ENSURE_TRUE(state && state->mLayerManager, );

(gdb) n

141 GLContext* context = static_cast<CompositorOGL*>(

state->mLayerManager->GetCompositor())->gl();

(gdb) n

142 NS_ENSURE_TRUE(context, );

(gdb) n

143 if (!context->IsCurrent()) {

(gdb) n

146 NS_ENSURE_TRUE(context->IsCurrent(), );

(gdb) p context->mIsOffscreen

$1 = false

(gdb) n

3566 /home/flypig/Documents/Development/jolla/gecko-dev-project/gecko-dev/

obj-build-mer-qt-xr/dist/include/GLContext.h: No such file or directory.

(gdb) n

156 ScopedScissorRect

And here's the equivalent step-through on ESR 91:

Thread 37 "Compositor" hit Breakpoint 1, mozilla::embedlite::

EmbedLiteCompositorBridgeParent::CompositeToDefaultTarget (

this=0x7fc8a01040, aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:143

143 {

(gdb) n

144 const CompositorBridgeParent::LayerTreeState* state =

CompositorBridgeParent::GetIndirectShadowTree(RootLayerTreeId());

(gdb) n

145 NS_ENSURE_TRUE(state && state->mLayerManager, );

(gdb) n

147 GLContext* context = static_cast<CompositorOGL*>(

state->mLayerManager->GetCompositor())->gl();

(gdb) n

148 NS_ENSURE_TRUE(context, );

(gdb) n

149 if (!context->IsCurrent()) {

(gdb) n

152 NS_ENSURE_TRUE(context->IsCurrent(), );

(gdb) p context->mDesc.isOffscreen

$2 = true

(gdb) n

3598 ${PROJECT}/obj-build-mer-qt-xr/dist/include/GLContext.h: No such file

or directory.

(gdb) n

155 MutexAutoLock lock(mRenderMutex);

(gdb) n

156 if (context->OffscreenSize() != mEGLSurfaceSize &&

!context->ResizeOffscreen(mEGLSurfaceSize)) {

(gdb) n

Thread 37 "Compositor" received signal SIGSEGV, Segmentation fault.

0x0000007ff28a8978 in mozilla::gl::GLScreenBuffer::Size (this=0x0)

at ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h:290

290 ${PROJECT}/obj-build-mer-qt-xr/dist/include/mozilla/UniquePtr.h: No

such file or directory.

(gdb)

So, for once, this is just as expected. On ESR 78 context->IsOffscreen() is returning false (as it should do), whereas on ESR 91 it's returning true.

Great! This is something with a very clear cause and which it should be possible to find a sensible fix for.

On ESR 91 the initial value of this flag is set to false:

struct GLContextDesc final : public GLContextCreateDesc {

bool isOffscreen = false;

};

The only place it gets set to true is in the GLContextEGL::CreateEGLPBufferOffscreenContextImpl() method:

auto fullDesc = GLContextDesc{desc};

fullDesc.isOffscreen = true;
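
Put another way, on ESR 91 the flag lives in a descriptor that defaults to false and is flipped by exactly one creation route. Here's a minimal sketch of that pattern. The struct and function names are hypothetical, and the assumption that every other route leaves the default untouched is mine rather than something taken from the gecko source.

```cpp
// Hypothetical stand-in for GLContextDesc: the flag defaults to false.
struct SketchContextDesc {
  int flags = 0;
  bool isOffscreen = false;
};

// Assumed behaviour of any route that doesn't touch the flag,
// for example one that wraps a context supplied by the front end.
SketchContextDesc DescForOtherRoutes() {
  return SketchContextDesc();
}

// The PBuffer offscreen route: the only place the flag is set to true.
SketchContextDesc DescForPBufferOffscreen() {
  SketchContextDesc desc;
  desc.isOffscreen = true;
  return desc;
}
```

So if a context reports itself as offscreen, it must have been created via the PBuffer route.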

In contrast on ESR 78 it's set in the constructor:

GLContext::GLContext(CreateContextFlags flags, const SurfaceCaps& caps,

GLContext* sharedContext, bool isOffscreen,

bool useTLSIsCurrent)

: mUseTLSIsCurrent(ShouldUseTLSIsCurrent(useTLSIsCurrent)),

mIsOffscreen(isOffscreen),

[...]

This constructor is called, by way of the GLContextEGL constructor, in two places. In GLContextEGL::CreateGLContext():

already_AddRefed<GLContextEGL> GLContextEGL::CreateGLContext(

GLLibraryEGL* const egl, CreateContextFlags flags, const SurfaceCaps& caps,

bool isOffscreen, EGLConfig config, EGLSurface surface, const bool useGles,

nsACString* const out_failureId) {

[...]

RefPtr<GLContextEGL> glContext =

new GLContextEGL(egl, flags, caps, isOffscreen, config, surface, context);

[...]

And in CreateWrappingExisting():

already_AddRefed<GLContext> GLContextProviderEGL::CreateWrappingExisting(

void* aContext, void* aSurface, void* aDisplay) {

[...]

RefPtr<GLContextEGL> gl =

new GLContextEGL(egl, CreateContextFlags::NONE, caps, false, config,

(EGLSurface)aSurface, (EGLContext)aContext);

[...]

The latter always ends up setting it to false, as you can see. The former passes on whatever value its caller supplies and can be arrived at via different routes, all of them in GLContextProviderEGL.cpp. It could be called from GLContextEGLFactory::CreateImpl() where it's always set to false:

already_AddRefed<GLContext> GLContextEGLFactory::CreateImpl(

EGLNativeWindowType aWindow, bool aWebRender, bool aUseGles) {

[...]

RefPtr<GLContextEGL> gl = GLContextEGL::CreateGLContext(

egl, flags, caps, false, config, surface, aUseGles, &discardFailureId);

[...]

Or it could be called from GLContextEGL::CreateEGLPBufferOffscreenContextImpl() where it's always set to true:

/*static*/

already_AddRefed<GLContextEGL>

GLContextEGL::CreateEGLPBufferOffscreenContextImpl(

CreateContextFlags flags, const mozilla::gfx::IntSize& size,

const SurfaceCaps& minCaps, bool aUseGles,

nsACString* const out_failureId) {

[...]

RefPtr<GLContextEGL> gl = GLContextEGL::CreateGLContext(

egl, flags, configCaps, true, config, surface, aUseGles, out_failureId);

[...]

The last of these is the only place where it can be set to true. So that's the one we have to focus on. Either there needs to be some more refined logic in there, or it shouldn't be going down that route at all.

This gives me something to dig into further tomorrow; but my train having arrived I'm going to have to leave it there for today. More tomorrow!

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

19 Apr 2024 : Day 221 #

This morning I started looking at why the sailfish-browser has been broken by my changes to the offscreen rendering process. The approach I'm using is to look at the intersection of:

- Parts of the code I've changed (as shown by the diff generated by git).

- Parts of the code that are touched by sailfish-browser (as indicated by gdb backtraces).
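
The intersection itself is simple enough to sketch in code. This is only an illustration of the idea: the Intersect() helper is hypothetical, and in practice the two lists are gathered by eye from the git diff and the gdb backtraces rather than fed into a program.

```cpp
#include <algorithm>
#include <iterator>
#include <string>
#include <vector>

// Returns the names present in both lists. The inputs are taken by
// value and sorted because std::set_intersection needs sorted ranges.
std::vector<std::string> Intersect(std::vector<std::string> changed,
                                   std::vector<std::string> touched) {
  std::sort(changed.begin(), changed.end());
  std::sort(touched.begin(), touched.end());
  std::vector<std::string> both;
  std::set_intersection(changed.begin(), changed.end(), touched.begin(),
                        touched.end(), std::back_inserter(both));
  return both;
}
```

Given a list of methods altered in the diff and a list of methods appearing in the backtraces, whatever comes back is the shortlist worth inspecting first.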

The first place I started looking was the CompositorOGL::CreateContext() method. This is the last method that gets called by sailfish-browser before the behaviour diverges from what I'd expect it to (as we saw yesterday). Plus I made very specific changes to this, as you can see from this portion of the diff:

$ git diff -- gfx/layers/opengl/CompositorOGL.cpp

diff --git a/gfx/layers/opengl/CompositorOGL.cpp b/gfx/layers/opengl/

CompositorOGL.cpp

index 8a423b840dd5..11105c77c43b 100644

--- a/gfx/layers/opengl/CompositorOGL.cpp

+++ b/gfx/layers/opengl/CompositorOGL.cpp

@@ -246,12 +246,14 @@ already_AddRefed<mozilla::gl::GLContext> CompositorOGL::

CreateContext() {

// Allow to create offscreen GL context for main Layer Manager

if (!context && gfxEnv::LayersPreferOffscreen()) {

+ SurfaceCaps caps = SurfaceCaps::ForRGB();

+ caps.preserve = false;

+ caps.bpp16 = gfxVars::OffscreenFormat() == SurfaceFormat::R5G6B5_UINT16;

+

nsCString discardFailureId;

- context = GLContextProvider::CreateHeadless(

- {CreateContextFlags::REQUIRE_COMPAT_PROFILE}, &discardFailureId);

- if (!context->CreateOffscreenDefaultFb(mSurfaceSize)) {

- context = nullptr;

- }

+ context = GLContextProvider::CreateOffscreen(

+ mSurfaceSize, caps, CreateContextFlags::REQUIRE_COMPAT_PROFILE,

+ &discardFailureId);

}

[...]

I've reversed this change and performed a partial build.

Now when I transfer it over to my phone the browser crashes with a segmentation fault as soon as the page is loaded. I feel like I've been here before! Because it takes so long to transfer the libxul.so output from the partial build over to my phone I stripped it of debugging symbols before the transfer. This makes a huge difference to the size of the library (stripping out around 3 GiB of data to leave just 100 MiB of code). Unfortunately that means I can't now find out where or why the crash is occurring.

Even worse, performing another partial rebuild doesn't seem to restore the debug symbols. So the only thing for me to do is a full rebuild, which is an overnight job.

I'll do that, but in the meantime I'll continue browsing through the diff in case a reason jumps out at me, or there's something obvious that needs changing.

Things will get a bit messy the more I change, but the beauty of these diaries is that I'll be keeping a full record. So it should all be clear what gets changed and why.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

18 Apr 2024 : Day 220 #

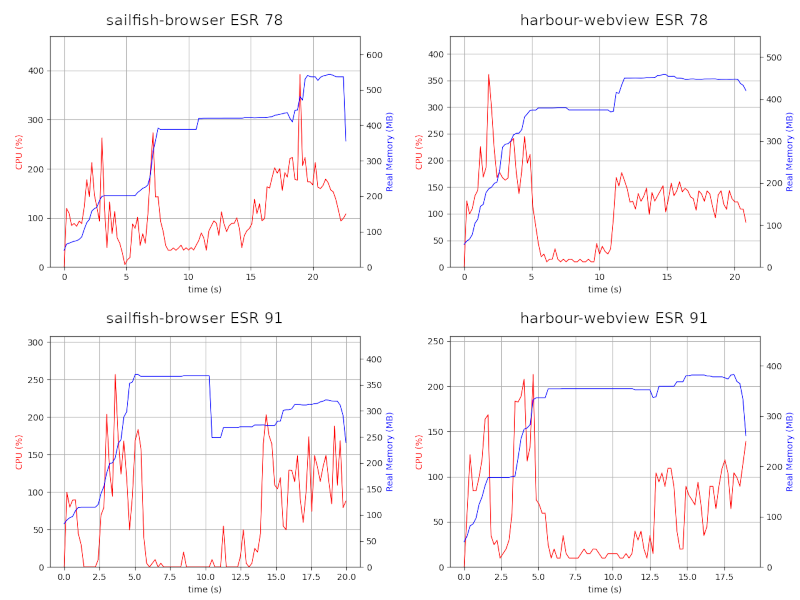

My first task today is to figure out when and how often DeclarativeWebContainer::clearWindowSurface() gets called on ESR 78. As I discovered yesterday it's called only once on the latest ESR 91 build and that's the same build where things are broken now even for the sailfish-browser. So my hope is that this will lead to some insight as to why it's broken.

My suspicion is that it should be called often. In fact, each time the screen is updated. If that's the case then it will give me a clear issue to track down: why is it not being called every update on ESR 91.

For context, recall that DeclarativeWebContainer::clearWindowSurface() is not part of the gecko code itself, but rather part of the sailfish-browser code.

So, I'm firing up the debugger to find out.

$ gdb sailfish-browser

[...]

(gdb) b DeclarativeWebContainer::clearWindowSurface

Breakpoint 1 at 0x3c750: file ../core/declarativewebcontainer.cpp, line 681.

(gdb) r

Starting program: /usr/bin/sailfish-browser

[...]

Created LOG for EmbedLite

[...]

Thread 1 "sailfish-browse" hit Breakpoint 1, DeclarativeWebContainer::

clearWindowSurface (this=0x55559bbc60) at ../core/

declarativewebcontainer.cpp:681

681 m_context->makeCurrent(this);

(gdb) bt

#0 DeclarativeWebContainer::clearWindowSurface (this=0x55559bbc60) at ../core/

declarativewebcontainer.cpp:681

#1 0x0000005555594e68 in DeclarativeWebContainer::exposeEvent (

this=0x55559bbc60) at ../core/declarativewebcontainer.cpp:815

#2 0x0000007fb83603dc in QWindow::event(QEvent*) () from /usr/lib64/

libQt5Gui.so.5

#3 0x0000005555594b8c in DeclarativeWebContainer::event (this=0x55559bbc60,

event=0x7fffffecf8) at ../core/declarativewebcontainer.cpp:770

#4 0x0000007fb7941144 in QCoreApplication::notify(QObject*, QEvent*) () from /

usr/lib64/libQt5Core.so.5

#5 0x0000007fb79412e8 in QCoreApplication::notifyInternal2(QObject*, QEvent*) (

) from /usr/lib64/libQt5Core.so.5

#6 0x0000007fb8356488 in QGuiApplicationPrivate::processExposeEvent(

QWindowSystemInterfacePrivate::ExposeEvent*) () from /usr/lib64/

libQt5Gui.so.5

#7 0x0000007fb83570b4 in QGuiApplicationPrivate::processWindowSystemEvent(

QWindowSystemInterfacePrivate::WindowSystemEvent*) ()

from /usr/lib64/libQt5Gui.so.5

#8 0x0000007fb83356e4 in QWindowSystemInterface::sendWindowSystemEvents(

QFlags<QEventLoop::ProcessEventsFlag>) () from /usr/lib64/libQt5Gui.so.5

#9 0x0000007faf65ac4c in ?? () from /usr/lib64/libQt5WaylandClient.so.5

#10 0x0000007fb70dfd34 in g_main_context_dispatch () from /usr/lib64/

libglib-2.0.so.0

#11 0x0000007fb70dffa0 in ?? () from /usr/lib64/libglib-2.0.so.0

#12 0x0000007fb70e0034 in g_main_context_iteration () from /usr/lib64/

libglib-2.0.so.0

#13 0x0000007fb7993a90 in QEventDispatcherGlib::processEvents(QFlags<QEventLoop:

:ProcessEventsFlag>) () from /usr/lib64/libQt5Core.so.5

#14 0x0000007fb793f608 in QEventLoop::exec(QFlags<QEventLoop::

ProcessEventsFlag>) () from /usr/lib64/libQt5Core.so.5

#15 0x0000007fb79471d4 in QCoreApplication::exec() () from /usr/lib64/

libQt5Core.so.5

#16 0x000000555557b360 in main (argc=<optimized out>, argv=<optimized out>) at

main.cpp:201

(gdb) c

Continuing.

[...]

Created LOG for EmbedLiteLayerManager

[...]

Thread 38 "Compositor" hit Breakpoint 1, DeclarativeWebContainer::

clearWindowSurface (this=0x55559bbc60) at ../core/

declarativewebcontainer.cpp:681

681 m_context->makeCurrent(this);

(gdb) bt

#0 DeclarativeWebContainer::clearWindowSurface (this=0x55559bbc60) at ../core/

declarativewebcontainer.cpp:681

#1 0x0000005555591910 in DeclarativeWebContainer::createGLContext (

this=0x55559bbc60) at ../core/declarativewebcontainer.cpp:1193

#2 0x0000007fb796b204 in QMetaObject::activate(QObject*, int, int, void**) ()

from /usr/lib64/libQt5Core.so.5

#3 0x0000007fbfb9f5c8 in QMozWindowPrivate::RequestGLContext (

this=0x5555b81290, context=@0x7edb46b2f0: 0x0, surface=@0x7edb46b2f8: 0x0,

display=@0x7edb46b300: 0x0) at qmozwindow_p.cpp:133

#4 0x0000007fbca9a374 in mozilla::embedlite::nsWindow::GetGLContext (

this=0x7f80ce9e90)

at mobile/sailfishos/embedshared/nsWindow.cpp:408

#5 0x0000007fba682718 in mozilla::layers::CompositorOGL::CreateContext (

this=0x7e98003420)

at gfx/layers/opengl/CompositorOGL.cpp:228

#6 0x0000007fba6a33a4 in mozilla::layers::CompositorOGL::Initialize (

this=0x7e98003420, out_failureReason=0x7edb46b720)

at gfx/layers/opengl/CompositorOGL.cpp:374

#7 0x0000007fba77aff4 in mozilla::layers::CompositorBridgeParent::

NewCompositor (this=this@entry=0x7f80ce94a0, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1534

#8 0x0000007fba784660 in mozilla::layers::CompositorBridgeParent::

InitializeLayerManager (this=this@entry=0x7f80ce94a0, aBackendHints=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1491

#9 0x0000007fba7847a8 in mozilla::layers::CompositorBridgeParent::

AllocPLayerTransactionParent (this=this@entry=0x7f80ce94a0,

aBackendHints=..., aId=...)

at gfx/layers/ipc/CompositorBridgeParent.cpp:1587

#10 0x0000007fbca81234 in mozilla::embedlite::EmbedLiteCompositorBridgeParent::

AllocPLayerTransactionParent (this=0x7f80ce94a0, aBackendHints=...,

aId=...)

at mobile/sailfishos/embedthread/EmbedLiteCompositorBridgeParent.cpp:77

#11 0x0000007fba05f3f0 in mozilla::layers::PCompositorBridgeParent::

OnMessageReceived (this=0x7f80ce94a0, msg__=...) at

PCompositorBridgeParent.cpp:1391

[...]

#27 0x0000007fb735989c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb) c

Continuing.

[...]

Thread 38 "Compositor" hit Breakpoint 1, DeclarativeWebContainer::

clearWindowSurface (this=0x55559bbc60) at ../core/

declarativewebcontainer.cpp:681

681 m_context->makeCurrent(this);

(gdb) bt

#0 DeclarativeWebContainer::clearWindowSurface (this=0x55559bbc60) at ../core/

declarativewebcontainer.cpp:681

#1 0x0000005555591830 in DeclarativeWebContainer::clearWindowSurfaceTask (

data=0x55559bbc60) at ../core/declarativewebcontainer.cpp:671

#2 0x0000007fbca81e10 in details::CallFunction<0ul, void (*)(void*), void*> (

arg=..., function=<optimized out>)

at ipc/chromium/src/base/task.h:52

#3 DispatchTupleToFunction<void (*)(void*), void*> (arg=...,

function=<optimized out>)

at ipc/chromium/src/base/task.h:53

#4 RunnableFunction<void (*)(void*), mozilla::Tuple<void*> >::Run (

this=<optimized out>)

at ipc/chromium/src/base/task.h:324

#5 0x0000007fb9bfe4e4 in nsThread::ProcessNextEvent (aResult=0x7edb46be77,

aMayWait=<optimized out>, this=0x7f80cc0580)

at xpcom/threads/nsThread.cpp:1211

[...]

#15 0x0000007fb735989c in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/

clone.S:78

(gdb) c

Continuing.

[...]

It turns out I'm wrong about this DeclarativeWebContainer::clearWindowSurface() method. It gets called three times as the page is loading and that's it. Checking the code, it's triggered by signals that come from qtmozembed. These are, in order:

- DeclarativeWebContainer::exposeEvent().

- DeclarativeWebContainer::createGLContext() triggered by the QMozWindow::requestGLContext() signal.

- WebPageFactory::aboutToInitialize() on page creation.
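The second of these shows up in the backtrace with createGLContext() sitting directly above QMetaObject::activate() and QMozWindowPrivate::RequestGLContext() on the Compositor thread. That's what you'd expect from a Qt::DirectConnection, where the slot runs immediately inside the emitter's call stack rather than being deferred to an event loop. Here's a minimal sketch of the difference in plain C++. The TaskQueue, Signal and Delivery types are hypothetical stand-ins invented for illustration; they are not Qt's or qtmozembed's implementation.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for an event loop: holds tasks until they are run.
class TaskQueue {
public:
    void post(std::function<void()> task) { m_tasks.push(std::move(task)); }

    // Run everything posted so far, in the order it was posted.
    void processEvents() {
        while (!m_tasks.empty()) {
            std::function<void()> task = std::move(m_tasks.front());
            m_tasks.pop();
            task();
        }
    }

private:
    std::queue<std::function<void()>> m_tasks;
};

enum class Delivery { Direct, Queued };

// Hypothetical minimal signal supporting the two delivery modes.
class Signal {
public:
    explicit Signal(TaskQueue& queue) : m_queue(queue) {}

    void connect(std::function<void()> slot, Delivery delivery) {
        assert(slot);  // an empty slot would throw when invoked
        m_slots.push_back({std::move(slot), delivery});
    }

    void emitSignal() {
        for (const Connection& c : m_slots) {
            if (c.delivery == Delivery::Direct) {
                c.slot();              // runs now, inside the emitter's call stack
            } else {
                m_queue.post(c.slot);  // runs later, when the queue is processed
            }
        }
    }

private:
    struct Connection {
        std::function<void()> slot;
        Delivery delivery;
    };
    TaskQueue& m_queue;
    std::vector<Connection> m_slots;
};

// Emits a queued signal first and a direct one second, then records the
// order in which the slots actually ran.
std::vector<std::string> runDeliveryDemo() {
    std::vector<std::string> log;
    TaskQueue queue;
    Signal queuedSignal(queue);
    Signal directSignal(queue);

    queuedSignal.connect([&log] { log.push_back("queued slot"); }, Delivery::Queued);
    directSignal.connect([&log] { log.push_back("direct slot"); }, Delivery::Direct);

    queuedSignal.emitSignal();
    directSignal.emitSignal();
    log.push_back("emit returned");
    queue.processEvents();
    return log;
}
```

Even though the queued signal is emitted first, its slot only runs once the queue is processed, so the direct slot ends up first in the log. A breakpoint in a directly connected slot will therefore always show the emitter in its backtrace, whereas a deferred one shows whatever is draining the queue.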

Here are some of the calls and signals which potentially could result in a call to clearWindowSurface(). Not all of these are actually happening during the runs I've been testing:

DeclarativeWebContainer::exposeEvent()

connect(m_mozWindow.data(), &QMozWindow::requestGLContext, this,

&DeclarativeWebContainer::createGLContext, Qt::DirectConnection);

connect(m_mozWindow.data(), &QMozWindow::compositorCreated, this,

&DeclarativeWebContainer::postClearWindowSurfaceTask);

connect(m_model.data(), &DeclarativeTabModel::tabClosed, this,

&DeclarativeWebContainer::releasePage);

DeclarativeWebContainer::clearSurface()

On ESR 91 only one of these is happening: the first one triggered by a call to exposeEvent(). So what of the others? Placing breakpoints in various places I'm able to identify that CompositorOGL::CreateContext() is called on ESR 91. This appears in the call stack of the second breakpoint hit on ESR 78, so this should in theory also be calling clearWindowSurface() on ESR 91, but it's not.

What happens is that there's a break in the call stack. While the following aren't called:

#0 clearWindowSurface() at ../core/declarativewebcontainer.cpp:681

#1 createGLContext() at ../core/declarativewebcontainer.cpp:1193

#2 QMetaObject::activate() /usr/lib64/libQt5Core.so.5

#3 QMozWindowPrivate::RequestGLContext() at qmozwindow_p.cpp:133

#4 GetGLContext() mobile/sailfishos/embedshared/nsWindow.cpp:408

The following are called. These are all prior to the others in the callstack on ESR 78, which means that the break is happening in the CompositorOGL::CreateContext() method.

#5 CompositorOGL::CreateContext() gfx/layers/opengl/CompositorOGL.cpp:228

#6 CompositorOGL::Initialize() gfx/layers/opengl/CompositorOGL.cpp:374

#7 NewCompositor() gfx/layers/ipc/CompositorBridgeParent.cpp:1534

#8 InitializeLayerManager() gfx/layers/ipc/CompositorBridgeParent.cpp:1491

So tomorrow I'll need to take a look at the CompositorOGL::CreateContext() code. Based on what I've seen today, something different is likely to be happening in there compared to what was happening with the working version. By looking at the diff and seeing what's changed, it should be possible to figure out what. More on this tomorrow.
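The reasoning used to locate the break can be written down as a small routine: take the ESR 78 backtrace as the reference, note which of its frames are actually reached on ESR 91, and pick the innermost one that is. Here's a minimal sketch of that, assuming the frames are compared by name only. The function names innermostReachedFrame(), frameName() and esr78Stack() are made up for illustration, and the set of reached frames is typed in by hand from the breakpoint results described above.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// The reference stack from ESR 78, innermost frame first (as gdb prints it).
std::vector<std::string> esr78Stack() {
    return {
        "DeclarativeWebContainer::clearWindowSurface",  // #0
        "DeclarativeWebContainer::createGLContext",     // #1
        "QMetaObject::activate",                        // #2
        "QMozWindowPrivate::RequestGLContext",          // #3
        "nsWindow::GetGLContext",                       // #4
        "CompositorOGL::CreateContext",                 // #5
        "CompositorOGL::Initialize",                    // #6
        "CompositorBridgeParent::NewCompositor",        // #7
        "CompositorBridgeParent::InitializeLayerManager"// #8
    };
}

// Returns the index of the innermost reference frame that was reached on the
// build under test, or -1 if none of the frames were reached.
int innermostReachedFrame(const std::vector<std::string>& referenceStack,
                          const std::set<std::string>& reached) {
    for (std::size_t i = 0; i < referenceStack.size(); ++i) {
        if (reached.count(referenceStack[i]) > 0) {
            return static_cast<int>(i);
        }
    }
    return -1;
}

// Convenience accessor that checks the index is valid before using it.
std::string frameName(const std::vector<std::string>& stack, int index) {
    assert(index >= 0 && static_cast<std::size_t>(index) < stack.size());
    return stack[static_cast<std::size_t>(index)];
}
```

Feeding in frames #5 to #8 as the reached set gives index 5, which is CompositorOGL::CreateContext(). Whatever stops the call chain from continuing on to GetGLContext() must therefore be happening inside that method.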

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

17 Apr 2024 : Day 219 #

Today has been a bit of a disheartening day from a Gecko perspective. So disheartening in fact that I considered pausing this blog, taking some time off and then trying to fix it in private to avoid having to admit how badly things are going right now.

But it's important to show the downs as well as the ups. Software development can be a messy business at times, things rarely go to plan and even when they do there's still often an awful lot of angst and frustration preceding the enjoyment of getting something working.

So here it is, warts and all.

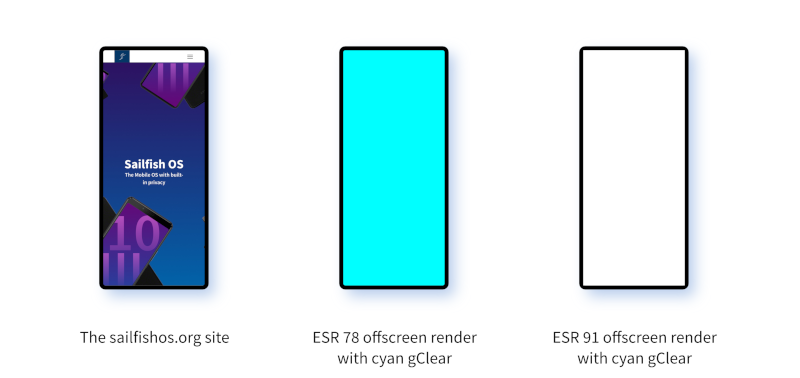

Overnight I rebuilt the packages with the new patches installed. Running the WebView showed no changes in the output: still a white screen, no page rendering or coloured backgrounds. I can live with that, it's not what I wanted but it's also no worse than before.

So I decided to head off in the direction that was set last Thursday when I laid plans to check the implementation that happens between the DeclarativeWebContainer::clearWindowSurface() method and the CompositorOGL::EndFrame() method. This direction is in response to the useful discussion in last week's Sailfish Community Meeting.

First up I wanted to establish what was happening on the window side, so starting at clearWindowSurface() I added some code first to change the colour used to clear the texture from white to green.

This didn't affect the rendering, which remains white. Okay, so far so normal. That's a little unexpected, but nevertheless adds new and useful information.

I then added some code to read off the colour at the centre of the texture. This is pretty simple code and, as before, I added some debug output lines so we can find out what's going wrong. Here's what the method looks like now:

Executing this I was surprised to find that there was no new output from this. So I tried to set a breakpoint on it, but gdb claims it doesn't exist in the binary. A bit of reflection made me realise why: this is code in sailfish-browser, which forms part of the browser, not the WebView.

So for the WebView I'll need to find the similar equivalent call. Before trying to find out where the correct call lives, I thought I'd first see what happens when I run the browser instead of the WebView. Will gdb have more luck with that?

And this is where things start to go wrong. When running with the browser the method is called once. The screen turns green. But there's no other rendering. The fact the screen goes green is good. The fact there's no other rendering is bad. Very bad.

It means that over the last few weeks while I've been trying to fix the WebView render pipeline, I've been doing damage to the browser render pipeline in the process. I honestly thought they didn't interact at this level, but it turns out I was wrong.

So now I have both a broken WebView and a broken browser to fix. Grrrr.

While I was initially quite downhearted about this, on reflection it's not quite as bad as it sounds. The working browser version is still safely stored in the repository, so if necessary I can just revert back. But I've also got all of the changes on top of this easily visible using git locally on my system. So I can at least now work through the changes to see whether I can figure out what's responsible.

I'm not going to make more progress on this tonight, so I'll have to return to it tomorrow to try to understand what I've broken.

So very frustrating. But that's software development for you.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

Comment

But it's important to show the downs as well as the ups. Software development can be a messy business at times, things rarely go to plan and even when they do there's still often an awful lot of angst and frustration preceding the enjoyment of getting something working.

So here it is, warts and all.

Overnight I rebuilt the packages with the new patches installed. Running the WebView showed no changes in the output: still a white screen, no page rendering or coloured backgrounds. I can live with that, it's not what I wanted but it's also no worse than before.

So I decided to head off in the direction that was set last Thursday when I laid plans to check the implementation that happens between the DeclarativeWebContainer::clearWindowSurface() method and the CompositorOGL::EndFrame() method. This direction is in response to the useful discussion in last week's Sailfish Community Meeting.

First up I wanted to establish what was happening on the window side, so starting at clearWindowSurface() I added some code first to change the colour used to clear the texture from white to green.

This didn't affect the rendering, which remains white. Okay, so far so normal. That's a little unexpected, but nevertheless adds new and useful information.

I then added some code to read off the colour at the centre of the texture. This is pretty simple code and, as before, I added some debug output lines so we can find out what's going wrong. Here's what the method looks like now:

void DeclarativeWebContainer::clearWindowSurface()

{

Q_ASSERT(m_context);

// The GL context should always be used from the same thread in which it

// was created.

Q_ASSERT(m_context->thread() == QThread::currentThread());

m_context->makeCurrent(this);

QOpenGLFunctions_ES2* funcs =

m_context->versionFunctions<QOpenGLFunctions_ES2>();

Q_ASSERT(funcs);

funcs->glClearColor(0.0, 1.0, 0.0, 0.0);

funcs->glClear(GL_COLOR_BUFFER_BIT);

m_context->swapBuffers(this);

QSize screenSize = QGuiApplication::primaryScreen()->size();

size_t bufferSize = screenSize.width() * screenSize.height() * 4;

uint8_t* buf = static_cast<uint8_t*>(calloc(sizeof(uint8_t), bufferSize));

funcs->glReadPixels(0, 0, screenSize.width(), screenSize.height(),

GL_RGBA, GL_UNSIGNED_BYTE, buf);

int xpos = screenSize.width() / 2;

int ypos = screenSize.height() / 2;

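// Byte offset of the centre pixel: each row is screenSize.width() pixels, 4 bytes (RGBA) per pixel.

int pos = (ypos * screenSize.width() + xpos) * 4;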

volatile char red = buf[pos];

volatile char green = buf[pos + 1];

volatile char blue = buf[pos + 2];

volatile char alpha = buf[pos + 3];