Gecko-dev Diary

Starting in August 2023 I'll be upgrading the Sailfish OS browser from Gecko version ESR 78 to ESR 91. This page catalogues my progress.

Latest code changes are in the gecko-dev sailfishos-esr91 branch.

There is an index of all posts in case you want to jump to a particular day.

3 May 2024 : Day 235

As discussed at the start of the week, this is going to be my last developer diary post for a little bit. But I want to make absolutely clear that this is a temporary pause. I'm heading to the HPC Days Conference in Durham next week where I'll be giving a talk on Matching AI Research to HPC Resource. I'm expecting it to be a packed schedule and, as is often the case with this kind of event, I'm not expecting to be able to fit in my usual gecko work alongside this. So there will be a pause, but I'll be back on the 18th May to restart from where I'm leaving things today.

During the pause I'm hoping to write up some of the blog posts that I've put on hold while working on the gecko engine, so things may not be completely silent around here. But the key message I want to get across is that I'm not abandoning gecko. Not at all. I'll be back to it in no time.

Nevertheless, as I write this I'm still frustrated and stumped by my lack of progress with the offscreen rendering. So I'm also hoping that a break will give me the chance to come up with some alternative approaches. Over the last couple of days I attempted to capture the contents of the surface used to transfer the offscreen texture onscreen, but the results were inconclusive at best.

On the forums I received helpful advice from Tone (tortoisedoc). Tone highlighted the various areas where texture decoding can go awry:

two things can go wrong (assuming the data is correct in the texture):

- alignment of data (start, stride)

- format of pixel (ARGB / RGBA etc)

Is the image the same the browser would show? Which image are you displaying in the embedded view?

These are all great points and great questions. Although I know what sort of image I'm asking for (either RGB or RGBA in UNSIGNED_BYTE format) the results don't seem to be matching that. It's made more complex by the fact that the outline of the image is there (which suggests things like start and stride are correct) but still many of the pixels are just blank. It's somewhat baffling and I think the reason might be more to do with an image that doesn't exist rather than an image which is being generated in the wrong format.
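To make Tone's two failure modes concrete, here's a minimal Python sketch of how a single pixel is located in, and decoded from, a raw buffer. The function name `read_pixel` and its parameters are hypothetical, chosen purely for illustration; nothing here is taken from the gecko code.

```python
def read_pixel(data, x, y, stride, start=0, order="RGBA"):
    """Return the pixel at (x, y) as a dict mapping channel name to value.

    start  -- byte offset of the first pixel in the buffer
    stride -- number of bytes from the start of one row to the next
    order  -- channel layout in memory, e.g. "RGBA" or "ARGB"
    """
    offset = start + y * stride + x * len(order)
    raw = data[offset:offset + len(order)]
    if len(raw) != len(order):
        raise IndexError("pixel lies outside the buffer")
    return dict(zip(order, raw))


# A 2x2 RGBA image with 2 bytes of padding at the end of each row
row0 = bytes([255, 0, 0, 255, 0, 255, 0, 255, 0, 0])
row1 = bytes([0, 0, 255, 255, 9, 8, 7, 255, 0, 0])
image = row0 + row1

# Correct stride (10 bytes) finds the pixel we expect
print(read_pixel(image, 1, 1, stride=10))
# Wrong stride (8 bytes, ignoring the padding) lands two bytes early
print(read_pixel(image, 1, 1, stride=8))
# Right position but wrong channel order mislabels the components
print(read_pixel(image, 1, 1, stride=10, order="ARGB"))
```

A wrong stride shifts every row relative to the previous one, which shears the whole picture, whereas a wrong channel order keeps the shapes intact but changes the colours.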

But I could be wrong and I'll continue to consider these possibilities and try to figure out the underlying reason. I really appreciate the input and as always it gives me more to go on.

But today I've shifted focus just briefly by giving the code a last review before the pause: sifting through the code again to try to spot differences.

There were many places I thought there might be differences, such as the fact that it wasn't clear to me that the GLContext->mDesc.isOffscreen flag was being set. But stepping through the application in the debugger showed it was set after all. So no fix to be had there.

The only difference I can see — and it's too small to make a difference I'm sure — is that the Surface capabilities are set at slightly different places. It's possible that in the gap between where it's set in ESR 78 and the place it's set slightly later in ESR 91, something makes use of it and a difference results.

I'm not convinced to be honest, but to avoid even the slightest doubt I've updated the code so that the values are now set explicitly:

```cpp
GLContext::GLContext(const GLContextDesc& desc, GLContext* sharedContext,
                     bool useTLSIsCurrent)
    : mDesc(desc),
      mUseTLSIsCurrent(ShouldUseTLSIsCurrent(useTLSIsCurrent)),
      mDebugFlags(ChooseDebugFlags(mDesc.flags)),
      mSharedContext(sharedContext),
      mWorkAroundDriverBugs(
          StaticPrefs::gfx_work_around_driver_bugs_AtStartup()) {
  mCaps.any = true;
  mCaps.color = true;
[...]
}
[...]
bool GLContext::InitImpl() {
[...]
  // TODO: Remove SurfaceCaps::any.
  if (mCaps.any) {
    mCaps.any = false;
    mCaps.color = true;
    mCaps.alpha = false;
  }
[...]
```

The updated code is built and right now transferring over to my development phone. As I say though, this doesn't look especially promising to me. I'm not holding my breath for a successful render.

And I was right: no improvements after this change. Argh. How frustrating.

I'll continue looking through the fine details of the code. There has to be a difference in here that I'm missing. And while it doesn't make for stimulating diary entries, just sitting and sifting through the code seems like an essential task nonetheless.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

If you've got this far, then not only do you have my respect and thanks, but I'd also urge you to return on the 18th May when I'll be picking things up from where I've left them today.

2 May 2024 : Day 234

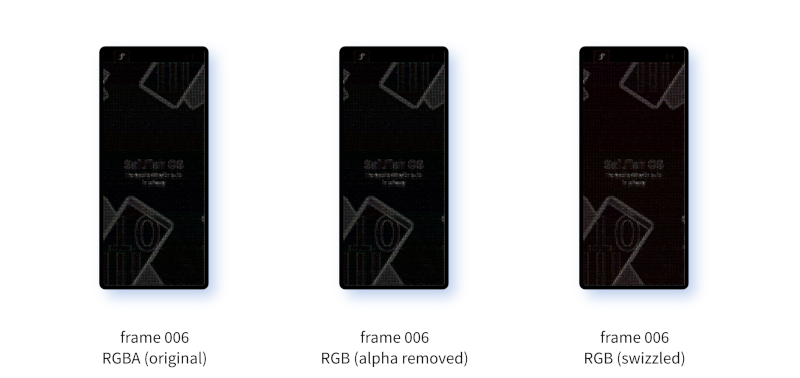

Yesterday I added code to the build to try to capture the image from the surface texture that's supposed to be used for rendering to the screen. I got poor results with it though: the colours are wrong and the images look corrupted somehow.

So today I've been playing around with the data to try to figure out why. One possibility that sprang to mind is that the data needs swizzling. Swizzling is the act of rearranging pixels, components or bits within the components. For instance, the data might be being read as little-endian (or big-endian) while GIMP interprets it as big-endian (or little-endian), in which case reversing the endianness might help.
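As a small illustration of the endianness idea, the sketch below reverses the byte order within each four-byte pixel, which is what reading a 32-bit value with the opposite endianness amounts to. The function name `swap_byte_order` is hypothetical and the input is assumed to be tightly packed four-byte pixels.

```python
def swap_byte_order(data, width=4):
    """Reverse the bytes inside each group of `width` bytes."""
    if len(data) % width != 0:
        raise ValueError("buffer length must be a multiple of the group width")
    out = bytearray(len(data))
    for pos in range(0, len(data), width):
        out[pos:pos + width] = data[pos:pos + width][::-1]
    return bytes(out)


# One RGBA pixel (R=0x11, G=0x22, B=0x33, A=0x44) becomes ABGR
pixel = bytes([0x11, 0x22, 0x33, 0x44])
print(swap_byte_order(pixel).hex())
```

Applying the swap twice returns the original data, so it's a safe transformation to experiment with.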

In order to check this I've written a simple Python script that allows me to rearrange bits and bytes within the image in a simplified way. I just have to run the image through the script:

```python
#!/bin/python3
import sys

def swizzle(byte):
    result = 0
    for pos in range(8):
        result |= ((byte & (1 << pos)) >> pos) << (7 - pos)
    return result

def convert(filein, fileout):
    with open(filein, "rb") as fin:
        data = fin.read(-1)
    with open(fileout, "wb") as fout:
        for pos in range(0, len(data), 4):
            r1, g1, b1, a1 = data[pos:pos + 4]
            # Rearrange the bits and bytes
            r2 = swizzle(r1)
            g2 = swizzle(g1)
            b2 = swizzle(b1)
            fout.write(r2.to_bytes(1, 'little'))
            fout.write(g2.to_bytes(1, 'little'))
            fout.write(b2.to_bytes(1, 'little'))

if len(sys.argv) != 3:
    print("Please provide input and output filenames")
else:
    filein = sys.argv[1]
    fileout = sys.argv[2]
    print(f"Converting from: {filein}")
    print(f"Converting to: {fileout}")
    convert(filein, fileout)
    print(f"Done")
```

After passing the images through this script and rearranging the data in as many ways as I can think of, I'm still left with a very messy result. The two new versions I created are one with just the alpha channel removed; and a second which also reverses the bits in each byte. Here are the three variants I'm left with:

- frame060-00.data: Original file (RGBA).

- frame060-01.data: Alpha removed (RGB).

- frame060-02.data: Alpha removed and swizzled (RGB).

The version without the alpha channel was created using a change to the gecko code by setting the texture format to RGB. I did this because I was concerned all of the zeroed-out values (which appear as black pixels in the image) might have been somehow related to this. But as you can see, the result is identical to generating an RGBA texture and then using the Python script to remove the alpha channel.

It's hard to see the difference between the first two versions and the third, which has the bit direction reversed. The colours are actually different, but because black remains black even after swizzling, the overall darkness of the image remains.
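The reason the darkness survives can be checked directly: reversing the bits of a byte maps 0x00 to 0x00 and 0xff to 0xff, so black pixels stay black. The sketch below is an assumed standalone version of the bit reversal (the name `reverse_bits` is mine), applied to a handful of byte values.

```python
def reverse_bits(byte):
    """Reverse the order of the eight bits in a byte value (0-255)."""
    result = 0
    for pos in range(8):
        if byte & (1 << pos):
            result |= 1 << (7 - pos)
    return result


for value in (0x00, 0xff, 0x03, 0x04, 0x0f, 0xd0):
    print(f"{value:02x} -> {reverse_bits(value):02x}")
```

Only the values in between change, which is why the few coloured pixels shift hue while the overall picture stays just as dark.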

As you can see we get very similar results irrespective of these changes. When we look at the data using a hex editor we can see why. The majority of the entries are zero, which is why we're getting such a preponderance of black in the images:

```
$ hexdump -vC -s 0x1e0 -n 0x090 frame060-00.data | less
000001e0  ff 03 04 ff 0f 00 00 ff  00 01 00 ff 00 00 f0 ff  |................|
000001f0  00 00 00 ff 00 00 00 ff  00 00 00 ff 00 00 00 ff  |................|
00000200  ff 03 04 ff 0f 00 00 ff  80 00 00 ff 00 00 d0 ff  |................|
00000210  00 00 00 ff 00 00 00 ff  00 00 00 ff 00 00 00 ff  |................|
00000220  00 00 00 ff 00 00 00 ff  00 00 00 ff 00 00 00 ff  |................|
00000230  00 00 00 ff 00 00 00 ff  00 00 00 ff 00 00 00 ff  |................|
00000240  ff 03 04 ff 0f 00 00 ff  00 01 00 ff 00 00 e0 ff  |................|
00000250  00 00 00 ff 00 00 00 ff  00 00 00 ff 00 00 00 ff  |................|
00000260  00 00 00 ff 00 00 00 ff  00 00 00 ff 00 00 00 ff  |................|
00000270
$ hexdump -vC -s 0x1e0 -n 0x090 frame060-01.data | less
000001e0  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
000001f0  00 00 00 00 00 00 00 00  ff 03 04 0f 00 00 00 01  |................|
00000200  00 00 00 d0 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000210  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000220  00 00 00 00 00 00 00 00  ff 03 04 0f 00 00 80 00  |................|
00000230  00 00 00 d0 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000240  ff 03 04 0f 00 00 00 01  00 00 00 e0 00 00 00 00  |................|
00000250  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000260  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000270
$ hexdump -vC -s 0x1e0 -n 0x090 frame060-02.data | less
000001e0  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
000001f0  00 00 00 00 00 00 00 00  ff c0 20 f0 00 00 00 80  |.......... .....|
00000200  00 00 00 0b 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000210  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000220  00 00 00 00 00 00 00 00  ff c0 20 f0 00 00 01 00  |.......... .....|
00000230  00 00 00 0b 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000240  ff c0 20 f0 00 00 00 80  00 00 00 07 00 00 00 00  |.. .............|
00000250  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000260  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000270
```

Inverting the colours doesn't help: we just end up with a predominance of white. It looks more like only certain points in the texture are being exported.
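Rather than eyeballing the hex dump, the proportion of blank pixels can be counted. This is a minimal sketch, assuming a tightly packed buffer with a known number of channels per pixel. The function name `count_blank_pixels` is hypothetical, and only the first three (colour) components are inspected so that an opaque alpha of 0xff doesn't hide a black pixel.

```python
def count_blank_pixels(data, channels):
    """Return (blank, total) where blank pixels have all colour bytes zero.

    Only the first three components of each pixel are checked, so an
    alpha channel (if present) is ignored.
    """
    if channels < 3:
        raise ValueError("expected at least three colour components")
    total = len(data) // channels
    blank = 0
    for index in range(total):
        pos = index * channels
        if data[pos:pos + 3] == b"\x00\x00\x00":
            blank += 1
    return blank, total


# First two rows of the RGBA dump shown above (offsets 0x1e0 and 0x1f0)
sample = bytes.fromhex(
    "ff0304ff 0f0000ff 000100ff 0000f0ff"
    "000000ff 000000ff 000000ff 000000ff"
)
blank, total = count_blank_pixels(sample, 4)
print(f"{blank} of {total} pixels are blank")
```

Running the same function over the contents of a whole frame file (read in binary mode, with 4 channels for the RGBA dump or 3 for the RGB ones) would put a number on the "preponderance of black".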

Swizzling then doesn't appear to be the answer. Another possibility, I thought, might be that I'm using the wrong parameters for the call to raw_fReadPixels() when actually requesting the data. Maybe these parameters have to match the underlying texture?

To test this out I've tried to determine the values that are used when the surface textures are created. I used the debugger for this. But I thought I'd also take the opportunity to check that we have the same values for ESR 78 and ESR 91. So first off, this is what I get when I check ESR 78:

```
Thread 37 "Compositor" hit Breakpoint 3, mozilla::gl::
    SharedSurface_Basic::Create (gl=0x7eac109140, formats=..., size=...,
    hasAlpha=false)
    at gfx/gl/SharedSurfaceGL.cpp:24
24                                      bool hasAlpha) {
(gdb) n
25        UniquePtr<SharedSurface_Basic> ret;
(gdb) p formats
$1 = (const mozilla::gl::GLFormats &) @0x7eac00595c: {color_texInternalFormat =
    6407, color_texFormat = 6407, color_texType = 5121,
    color_rbFormat = 32849, depthStencil = 35056, depth = 33190, stencil = 36168}
(gdb) p size
$2 = (const mozilla::gfx::IntSize &) @0x7eac003564: {<mozilla::gfx::
    BaseSize<int, mozilla::gfx::IntSizeTyped<mozilla::gfx::UnknownUnits> >> =
    {{{
    width = 1080, height = 2520}, components = {1080, 2520}}}, <mozilla::
    gfx::UnknownUnits> = {<No data fields>}, <No data fields>}
(gdb) p hasAlpha
$3 = false
(gdb) p gl
$4 = (mozilla::gl::GLContext *) 0x7eac109140
(gdb)
```

And here's the check for ESR 91. The details all look identical to me:

```
Thread 37 "Compositor" hit Breakpoint 3, mozilla::gl::
    SharedSurface_Basic::Create (gl=0x7ee019aa50, formats=..., size=...,
    hasAlpha=false)
    at gfx/gl/SharedSurfaceGL.cpp:59
59                                      bool hasAlpha) {
(gdb) n
60        UniquePtr<SharedSurface_Basic> ret;
(gdb) p formats
$4 = (const mozilla::gl::GLFormats &) @0x7ee00044e4: {color_texInternalFormat =
    6407, color_texFormat = 6407, color_texType = 5121,
    color_rbFormat = 32849, depthStencil = 35056, depth = 33190, stencil = 36168}
(gdb) p size
$5 = (const mozilla::gfx::IntSize &) @0x7f1f92effc: {<mozilla::gfx::
    BaseSize<int, mozilla::gfx::IntSizeTyped<mozilla::gfx::UnknownUnits> >> =
    {{{
    width = 1080, height = 2520}, components = {1080, 2520}}}, <mozilla::
    gfx::UnknownUnits> = {<No data fields>}, <No data fields>}
(gdb) p hasAlpha
$6 = false
(gdb) p gl
$7 = (mozilla::gl::GLContext *) 0x7ee019aa50
(gdb)
```

The actual format is specified in GLContext::ChooseGLFormats(). Here are the values taken from the debugger:

```
{
    color_texInternalFormat = 6407,
    color_texFormat = 6407,
    color_texType = 5121,
    color_rbFormat = 32849,
    depthStencil = 35056,
    depth = 33190,
    stencil = 36168
}
```

Checking these against the appropriate enums, these values are equivalent to the following:

```
{
    color_texInternalFormat = LOCAL_GL_RGB,
    color_texFormat = LOCAL_GL_RGB,
    color_texType = LOCAL_GL_UNSIGNED_BYTE,
    color_rbFormat = LOCAL_GL_RGB8,
    depthStencil = LOCAL_GL_DEPTH24_STENCIL8,
    depth = LOCAL_GL_DEPTH_COMPONENT24,
    stencil = LOCAL_GL_STENCIL_INDEX8
}
```
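For reference, the lookup can be done mechanically. The sketch below hard-codes the standard OpenGL enum values for just the six constants involved (gecko uses the same names with a `LOCAL_` prefix). The dictionary and function names are my own and not part of any library.

```python
# Standard OpenGL enum values for the constants of interest
GL_NAMES = {
    0x1907: "GL_RGB",
    0x1401: "GL_UNSIGNED_BYTE",
    0x8051: "GL_RGB8",
    0x88F0: "GL_DEPTH24_STENCIL8",
    0x81A6: "GL_DEPTH_COMPONENT24",
    0x8D48: "GL_STENCIL_INDEX8",
}

# Values printed by the debugger for the GLFormats structure
formats = {
    "color_texInternalFormat": 6407,
    "color_texFormat": 6407,
    "color_texType": 5121,
    "color_rbFormat": 32849,
    "depthStencil": 35056,
    "depth": 33190,
    "stencil": 36168,
}


def describe(formats):
    """Map each field to its enum name, or to hex if the value is unknown."""
    return {field: GL_NAMES.get(value, hex(value))
            for field, value in formats.items()}


for field, name in describe(formats).items():
    print(f"{field} = {name}")
```

Printing unknown values in hex makes it easier to search for them in the OpenGL headers, where the enums are defined in hexadecimal.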

I've used these values for the parameters to ReadPixels(), including removing the alpha channel. But sadly the results are practically identical. Here's the new output generated during the capture for reference:

```
frame000.data: Colour before: (0, 0, 0), 1
frame001.data: Colour before: (0, 0, 0), 1
frame002.data: Colour before: (0, 0, 0), 1
frame003.data: Colour before: (0, 0, 0), 1
frame004.data: Colour before: (208, 175, 205), 1
frame005.data: Colour before: (39, 0, 0), 1
frame006.data: Colour before: (67, 115, 196), 1
frame007.data: Colour before: (0, 0, 0), 1
frame008.data: Colour before: (0, 0, 0), 1
frame009.data: Colour before: (157, 149, 42), 1
frame010.data: Colour before: (0, 0, 0), 1
frame011.data: Colour before: (0, 32, 12), 1
frame012.data: Colour before: (71, 118, 198), 1
frame013.data: Colour before: (0, 0, 0), 1
frame014.data: Colour before: (0, 0, 0), 1
```

This is all interesting stuff, but it doesn't seem to get us any closer to what we were hoping for, which is supposed to be a copy of the image that ought to be shown on the screen. It's all been a bit of an unproductive diversion. I'll have to try to make more progress on that tomorrow.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

1 May 2024 : Day 233

Before I get into my diary entry today I want to add a reminder that I'll not be posting diaries next week, or the week after. Next week I'll be attending a conference and I need a bit of time to sort out a few other things in my wider life. So this will give me the chance to do that. But this is only a temporary gap; I'll be back straight after to continue where I left off.

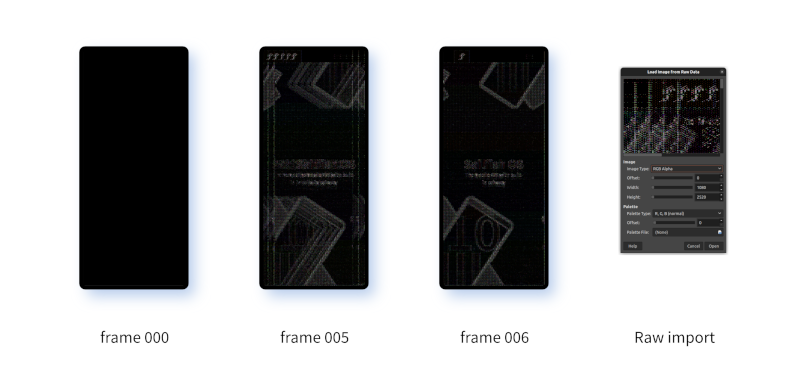

With that out of the way, let's get on with the entry for today. If you've read any of my diary entries over the last few days you'll know I've been trying to extract something useful from the GLScreenBuffer::Swap() method. I added some code into the method to read off pixel data from the render surface which generated some plausible-looking output.

But I wasn't totally convinced: it looked to me like there were too many zero entries that I couldn't explain.

So today I've been trying to do a couple of things. First I tried to get something to compare against; second I tried to make the code that captures the colour at a point a little more general by also saving out the entire image buffer to disk.

Let's tackle these in order. The obvious way to get something to compare against is to add the same code to the ESR 78 library and try running that. And this is exactly what I've been doing. The surrounding code in ESR 91 is almost identical to that in ESR 78, so it's a pretty straightforward task to just copy over the code changes from ESR 91 to ESR 78.

Having done this, built the library and deployed it to my phone, here's what's output when I now execute the browser:

```
=============== Preparing offscreen rendering context ===============
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
[...]
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (45, 91, 86, 255), 1
Colour after: (45, 91, 86, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (128, 13, 160, 255), 1
Colour after: (128, 13, 160, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
[...]
```

There are a couple of lines of interesting output here, but most of them just show black pixels and nothing else. That's not ideal. To be clear, this is output from a WebView app that is fully working. So I'd have expected to see a lot more colour data coming out in the debug output.

This has left me more confused than enlightened, so I've gone on to implement the second idea as well: exporting the image data to file so I can check what the image actually looks like.

Once again the code for this is pretty straightforward. All I'm doing is taking a copy of the entire surface, rather than just one pixel of it. This is provided as a contiguous block of memory: a raw buffer with the pixel values stored in it. So I'm just dumping this out to a file. To avoid completely thrashing my phone I've set it up to output an image only once in every ten renders. The filenames increment each time an image is exported, so I should capture several steps as the page completes its render.

Here's the code I'm using for this, added to the GLScreenBuffer::Swap() method. This is hacky code at best, but it's also temporary, so I'm not too concerned about the style here. Something quick and dirty is fine, as long as... well, as long as it works!

```cpp
static int count = 0;
static int filecount = 0;
size_t bufferSize;
uint8_t* buf;
bool result;
int xpos;
int ypos;
int pos;
volatile char red;
volatile char green;
volatile char blue;
volatile char alpha;

if (count % 10 == 0) {
  //bool GLScreenBuffer::ReadPixels(GLint x, GLint y, GLsizei width, GLsizei
  //    height, GLenum format, GLenum type, GLvoid* pixels)
  bufferSize = sizeof(char) * size.width * size.height * 4;
  buf = static_cast<uint8_t*>(calloc(sizeof(uint8_t), bufferSize));
  result = ReadPixels(0, 0, size.width, size.height, LOCAL_GL_RGBA,
                      LOCAL_GL_UNSIGNED_BYTE, buf);

  xpos = size.width / 2;
  ypos = size.height / 2;
  pos = (xpos + (size.width * ypos)) * 4;

  red = buf[pos];
  green = buf[pos + 1];
  blue = buf[pos + 2];
  alpha = buf[pos + 3];

  printf_stderr("Colour before: (%d, %d, %d, %d), %d\n", red,
                green, blue, alpha, result);

  #define FORMAT "/home/defaultuser/Documents/Development/gecko/frame%03d.dat"

  // Export out the pixel data
  int const len = 61 + 10;
  char filename[61 + 10];
  snprintf(filename, len, FORMAT, filecount);
  FILE *fh = fopen(filename, "w");
  fwrite(buf, sizeof(char), bufferSize, fh);
  fclose(fh);
  free(buf);
  filecount += 1;
}
count += 1;
```

After building and running the code I get some sensible looking output. As you can see there are sixteen frames generated before I quit the application. The first five look empty, but eventually some colours start coming through.

```
frame000.data: Colour before: (0, 0, 0, 255), 1
frame001.data: Colour before: (0, 0, 0, 255), 1
frame002.data: Colour before: (0, 0, 0, 255), 1
frame003.data: Colour before: (0, 0, 0, 255), 1
frame004.data: Colour before: (0, 0, 0, 255), 1
frame005.data: Colour before: (187, 125, 127, 255), 1
frame006.data: Colour before: (67, 115, 196, 255), 1
frame007.data: Colour before: (18, 0, 240, 255), 1
frame008.data: Colour before: (162, 225, 0, 255), 1
frame009.data: Colour before: (128, 202, 255, 255), 1
frame010.data: Colour before: (0, 0, 0, 255), 1
frame011.data: Colour before: (240, 255, 255, 255), 1
frame012.data: Colour before: (255, 159, 66, 255), 1
frame013.data: Colour before: (68, 115, 196, 255), 1
frame014.data: Colour before: (0, 0, 0, 255), 1
frame015.data: Colour before: (0, 192, 7, 255), 1
```
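The arithmetic behind the sampled pixel is worth spelling out, since it can be repeated offline against an exported file. This is a minimal Python sketch of the same calculation as in the C++ above, assuming a tightly packed buffer. The function names are hypothetical.

```python
def buffer_size(width, height, channels=4):
    """Number of bytes needed for a tightly packed image."""
    return width * height * channels


def centre_offset(width, height, channels=4):
    """Byte offset of the pixel at the centre of the image."""
    xpos = width // 2
    ypos = height // 2
    return (xpos + width * ypos) * channels


def centre_pixel(data, width, height, channels=4):
    """Return the centre pixel as a tuple of component values."""
    if len(data) != buffer_size(width, height, channels):
        raise ValueError("data size does not match the given dimensions")
    pos = centre_offset(width, height, channels)
    return tuple(data[pos:pos + channels])


# Values for a 1080 by 2520 RGBA surface
width, height = 1080, 2520
print(buffer_size(width, height))
print(centre_offset(width, height))
```

For a 1080 by 2520 RGBA surface each exported frame comes to 10,886,400 bytes, so a file of any other size would suggest the width, height or channel count is wrong.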

After copying the frame data from my phone over to my laptop I'm able to load the files into GIMP (the GNU Image Manipulation Program) using the raw plugin. This is activated for files with a .data extension and allows the data to be loaded in as if it were pure pixel data without any header or metadata. Because there's no header you have to specify certain parameters manually, such as the width, height and format of the image data.

I always forget exactly what the dimensions of the screens on my development Xperia 10 II devices are, but thankfully GSMArena is only a few clicks away to check:

Resolution: 1080 x 2520 pixels, 21:9 ratio (~457 ppi density)

Adding the dimensions into the raw data loader seems to do the trick.

The code I added to gecko requested a texture format of RGBA, so that's what I need to use when loading the data in. Sadly the results are not what I had hoped. There are clearly some data related to the render and it's worth noting that the buffer where these are stored is initialised to contain zeroes each frame, so the data is real, not just artefacts from the memory or previous render.

But most of the pixels are black, the colours are wrong and the images seem to be mangled in very strange ways as you can see in the screenshots.
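As an alternative to GIMP's raw loader, a dump can be wrapped in a simple header so that ordinary image viewers can open it. The sketch below is an illustration under stated assumptions rather than anything from the gecko tooling: it converts tightly packed RGBA data to a binary PPM (P6) image, dropping the alpha channel, with an optional vertical flip because OpenGL's glReadPixels() places the origin at the lower-left corner. The function name is hypothetical.

```python
def rgba_to_ppm(data, width, height, flip=False):
    """Convert tightly packed RGBA bytes to a binary PPM (P6) image."""
    if len(data) != width * height * 4:
        raise ValueError("data size does not match width * height * 4")
    rows = []
    for y in range(height):
        row = data[y * width * 4:(y + 1) * width * 4]
        rgb = bytearray()
        for pos in range(0, len(row), 4):
            # Keep red, green and blue; skip the alpha byte
            rgb += row[pos:pos + 3]
        rows.append(bytes(rgb))
    if flip:
        # Swap top and bottom for data stored bottom row first
        rows.reverse()
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + b"".join(rows)


# Tiny 1x2 example: a red pixel in the first row, blue in the second
sample = bytes([255, 0, 0, 255, 0, 0, 255, 255])
print(rgba_to_ppm(sample, 1, 2))
```

For a real frame the input would be the bytes read from one of the exported files, with a width of 1080 and a height of 2520, and the result written out in binary mode to a file with a .ppm extension.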

It's too late for me to figure this out tonight so I'll have a think about it overnight and come back to it in the morning.

As always, if you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

30 Apr 2024 : Day 232

I spent a bit of time yesterday trying to extract colour data from the SharedSurface_EGLImage object that should, as far as I understand it, be providing the raster image to be rendered to the screen. My plan has been to override the SharedSurface::ReadPixels() method (a method that currently just returns false and does nothing more) in order to make it perform the useful task of returning colour information. Since I've already set up the code to call this, once this method is working everything else should magically fall into place.

I tried adding code into the method body to perform the task like this:

bool SharedSurface_EGLImage::ReadPixels(GLint x, GLint y, GLsizei width,
    GLsizei height,
    GLenum format, GLenum type, GLvoid* pixels) {
  const auto& gl = GLContextEGL::Cast(mDesc.gl);
  gl->fBindTexture(LOCAL_GL_TEXTURE_2D, mProdTex);
  gl->fGetTexImage(LOCAL_GL_TEXTURE_2D, 0, format, type, pixels);
  return true;
}

But I hit a problem when the glGetTexImage() symbol, needed for the call to fGetTexImage(), wasn't available to load dynamically from the EGL library. The error kept on coming back:

Can't find symbol 'glGetTexImage'.
Crash Annotation GraphicsCriticalError: |[0][GFX1-]: Failed to create
    EGLConfig! (t=1.32158) [GFX1-]: Failed to create EGLConfig!

As I'm sure many of you will have noted, there is in fact no support for glGetTexImage() in OpenGL ES, the flavour of GL in use with EGL here, as is explained very succinctly on Stack Overflow. So it doesn't matter how many different permutations I was going to try, this was never going to work.

Thankfully the Stack Overflow post has an alternative approach with methods that are all shown to already be dynamically loaded in GLContext. Consequently I've updated the code to align with the suggested approach and it now looks like this:

bool SharedSurface_EGLImage::ReadPixels(GLint x, GLint y, GLsizei width,
    GLsizei height,
    GLenum format, GLenum type, GLvoid* pixels) {
  const auto& gl = GLContextEGL::Cast(mDesc.gl);
  // See https://stackoverflow.com/a/53993894
  GLuint fbo;
  gl->raw_fGenFramebuffers(1, &fbo);
  gl->raw_fBindFramebuffer(LOCAL_GL_FRAMEBUFFER, fbo);
  gl->fFramebufferTexture2D(LOCAL_GL_FRAMEBUFFER, LOCAL_GL_COLOR_ATTACHMENT0,
      LOCAL_GL_TEXTURE_2D, mProdTex, 0);
  gl->raw_fReadPixels(x, y, width, height, format, type, pixels);
  gl->raw_fBindFramebuffer(LOCAL_GL_FRAMEBUFFER, 0);
  gl->raw_fDeleteFramebuffers(1, &fbo);
  return true;
}

This doesn't seem to be entirely what I want because it's really just copying the texture data back into a framebuffer and reading from there, which is very similar to what I've already done but in a slightly different place in the code. But this at least brings us one step closer to the screen, which is where this all eventually is supposed to end up.

Although all of the EGL symbols used in the code I've added exist and are technically usable, not all of them are available because of the way their visibility is configured in GLContext. The raw_fBindFramebuffer() and raw_fDeleteFramebuffers() methods are both marked as private. I should explain that this is because these are all the raw variants of the methods. There are non-raw versions which have public visibility, but these have additional functionality I want to avoid triggering. I really do just want to use the raw versions.

To make them accessible to SharedSurface_EGLImage outside of GLContext I've therefore hacked their visibility like so:

//private:
public:
  void raw_fBindFramebuffer(GLenum target, GLuint framebuffer) {
    BEFORE_GL_CALL;
    mSymbols.fBindFramebuffer(target, framebuffer);
    AFTER_GL_CALL;
  }
[...]
// TODO: Make private again
public:
  void raw_fDeleteFramebuffers(GLsizei n, const GLuint* names) {
    BEFORE_GL_CALL;
    mSymbols.fDeleteFramebuffers(n, names);
    AFTER_GL_CALL;
  }
private:
  void raw_fDeleteRenderbuffers(GLsizei n, const GLuint* names) {
    BEFORE_GL_CALL;
    mSymbols.fDeleteRenderbuffers(n, names);
    AFTER_GL_CALL;
  }
[...]

This wouldn't be especially noteworthy or interesting were it not for the fact that the changes I'm making are only temporary while I debug things, so I'll want to change them back before I commit any changes to the public repository. As we've already seen before, taking a note in these diary entries of things I need to reverse is a great way for me to keep track and make sure I can reverse the changes as easily as possible.

Having made all of these changes it's time to test things out. I've built and uploaded the code, and set the WebView app running:

[JavaScript Warning: "Layout was forced before the page was fully loaded.
    If stylesheets are not yet loaded this may cause a flash of unstyled
    content." {file: "https://sailfishos.org/wp-includes/js/jquery/
    jquery.min.js?ver=3.5.1" line: 2}]
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (0, 0, 0, 255), 1
[...]
Colour after: (107, 79, 139, 255), 1
Colour before: (137, 207, 255, 255), 1
Colour after: (137, 207, 255, 255), 1
Colour before: (135, 205, 255, 255), 1
Colour after: (135, 205, 255, 255), 1
Colour before: (132, 204, 254, 255), 1
Colour after: (132, 204, 254, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (10, 144, 170, 255), 1
Colour after: (10, 144, 170, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
Colour before: (59, 128, 13, 255), 1
Colour after: (59, 128, 13, 255), 1
Colour before: (108, 171, 244, 255), 1
Colour after: (108, 171, 244, 255), 1
Colour before: (185, 132, 119, 255), 1
Colour after: (185, 132, 119, 255), 1
Colour before: (0, 0, 0, 255), 1
Colour after: (0, 0, 0, 255), 1
[...]

As you can see, the surface starts off fully black but gets rendered until eventually it's a mix of colours. There are still a lot of cases where the pixels are just black, which makes me a bit nervous, but the colours in general look intentional. I think this is a good sign.

But I'd like to have some proper confirmation, so tomorrow I might see if I can get a full copy of the texture written out to disk so I can see what's really happening there.
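Getting the buffer onto disk doesn't need much. What follows is a minimal sketch, assuming the pixels have already been read back into a byte vector; dumpRaw and loadRaw are hypothetical helper names and the file is written with no header at all, just the bytes as they sit in memory.

```cpp
#include <cstdint>
#include <fstream>
#include <iterator>
#include <string>
#include <vector>

// Write a pixel buffer to disk exactly as it sits in memory, with no header.
// Returns true only if the stream is still healthy after flushing.
bool dumpRaw(const std::string& path,
             const std::vector<std::uint8_t>& pixels) {
  std::ofstream out(path, std::ios::binary | std::ios::trunc);
  if (!out) {
    return false;
  }
  out.write(reinterpret_cast<const char*>(pixels.data()),
            static_cast<std::streamsize>(pixels.size()));
  out.flush();
  return static_cast<bool>(out);
}

// Read a raw dump back in, mainly to check the round trip.
std::vector<std::uint8_t> loadRaw(const std::string& path) {
  std::ifstream in(path, std::ios::binary);
  return std::vector<std::uint8_t>(std::istreambuf_iterator<char>(in),
                                   std::istreambuf_iterator<char>());
}
```

Because nothing but pixel bytes goes into the file, whatever opens it afterwards has to be told the width, height and format separately.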

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.

29 Apr 2024 : Day 231 #

I'm still in the process of trying to extract pixel colours from the surface used for transferring the offscreen buffer to the onscreen render. The snag I hit yesterday is that I wanted to use glGetTexImage(), but this symbol isn't being dynamically loaded for use with GLContext.


Consequently I've added it to the end of the coreSymbols table like this:

const SymLoadStruct coreSymbols[] = {
  { (PRFuncPtr*) &mSymbols.fActiveTexture, {{
    "glActiveTexture", "glActiveTextureARB" }} },
  { (PRFuncPtr*) &mSymbols.fAttachShader, {{ "glAttachShader",
    "glAttachShaderARB" }} },
  { (PRFuncPtr*) &mSymbols.fBindAttribLocation, {{
    "glBindAttribLocation", "glBindAttribLocationARB" }} },
  { (PRFuncPtr*) &mSymbols.fBindBuffer, {{ "glBindBuffer",
    "glBindBufferARB" }} },
  { (PRFuncPtr*) &mSymbols.fBindTexture, {{ "glBindTexture",
    "glBindTextureARB" }} },
[...]
  { (PRFuncPtr*) &mSymbols.fGetTexImage, {{ "glGetTexImage",
    "glGetTexImageARB" }} },
  END_SYMBOLS
};
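To make the idea behind a table like this concrete, here's a simplified sketch of a loader that tries each candidate name in turn and reports anything it can't resolve. It's written under my own assumptions: SymbolEntry, Lookup and loadSymbols are hypothetical names, not gecko's SymLoadStruct machinery, and the lookup function stands in for whatever the driver library actually exports.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical, simplified table entry: a slot to fill plus the candidate
// names to try, in order.
struct SymbolEntry {
  void** slot;
  std::vector<std::string> names;
};

// Looks a name up in the driver; returns nullptr when it isn't exported.
using Lookup = std::function<void*(const std::string&)>;

// Fill every slot using the first name that resolves. Returns the primary
// names of the entries that could not be resolved at all.
std::vector<std::string> loadSymbols(const std::vector<SymbolEntry>& table,
                                     const Lookup& lookup) {
  std::vector<std::string> missing;
  for (const SymbolEntry& entry : table) {
    *entry.slot = nullptr;
    for (const std::string& name : entry.names) {
      if (void* fn = lookup(name)) {
        *entry.slot = fn;
        break;
      }
    }
    if (*entry.slot == nullptr && !entry.names.empty()) {
      missing.push_back(entry.names.front());
    }
  }
  return missing;
}
```

In this sketch a missing symbol is simply reported back. Whether that should be treated as fatal is left to the caller, and adding an entry to the table only helps if the library really does export one of the listed names.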

I've also searched through the rest of the code for glBindBuffer, which is the reference I'm using for how to introduce glGetTexImage. Although it comes up in many places in the code, none of them look relevant to what I'm doing, so I'm going to guess that the change I've made above is all that's needed.

At least I may as well build it and find out.

Now when I run it I get this:

Created LOG for EmbedLiteLayerManager
Can't find symbol 'glGetTexImage'.
Crash Annotation GraphicsCriticalError: |[0][GFX1-]: Failed to create
    EGLConfig! (t=1.32158) [GFX1-]: Failed to create EGLConfig!
Segmentation fault

That's not a great sign. I'm going to have to put some more thought into this.

This error message appears twice in the code so I can't be entirely certain, but it looks like the error is resulting in a failure in the following method:

bool CreateConfig(EglDisplay& egl, EGLConfig* aConfig, int32_t depth,
                  bool aEnableDepthBuffer, bool aUseGles, int aVisual);

At this stage I'm not really sure what I'm doing, but I've changed my earlier change so it now looks like this:

const SymLoadStruct coreSymbols[] = {
  { (PRFuncPtr*) &mSymbols.fActiveTexture, {{
    "glActiveTexture", "glActiveTextureARB" }} },
  { (PRFuncPtr*) &mSymbols.fAttachShader, {{ "glAttachShader",
    "glAttachShaderARB" }} },
  { (PRFuncPtr*) &mSymbols.fBindAttribLocation, {{
    "glBindAttribLocation", "glBindAttribLocationARB" }} },
  { (PRFuncPtr*) &mSymbols.fBindBuffer, {{ "glBindBuffer",
    "glBindBufferARB" }} },
  { (PRFuncPtr*) &mSymbols.fBindTexture, {{ "glBindTexture",
    "glBindTextureARB" }} },
[...]
  { (PRFuncPtr*) &mSymbols.fGetTexImage, {{ "glGetTexImage" }} },
  END_SYMBOLS
};

Sadly after rebuilding, re-uploading and re-running, that didn't fix it. I'm out of ideas for today, but maybe after a good night's sleep I'll have some better ideas tomorrow. Let's see in the morning.

If you'd like to read any of my other gecko diary entries, they're all available on my Gecko-dev Diary page.